Supply chain attacks on the software industry are becoming common. The apps we write have very long and deep dependency chains, and any component that’s subverted by an adversary can do anything the app itself may do. As many widely used components are authored pseudo-anonymously on laptops that are easily hacked, we’re kinda asking for trouble. It’s only surprising it took this long.

Maybe the solution lies in sandboxes with capabilities: opaque objects that encapsulate the ability to do something. If your program hasn’t been given one then you aren’t able to do the action it protects. Think of them like magical keys: you can’t forge them, but you can clone them and (in some systems) reduce the power of the clone whilst you do.
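Here’s what the key metaphor might look like in Java. This is a minimal sketch using a hypothetical `DirCap` class, not any real library’s API. The only intended way to touch files under a directory is to hold a `DirCap`, and any holder can derive a weaker clone confined to a subdirectory. It leans on Java’s access rules, so it’s only unforgeable as long as nothing can use reflection to reach the private constructor.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical capability: holding a DirCap is the only intended way to
// read files under its root directory.
final class DirCap {
    private final Path root;

    // Private constructor: other code can't mint a DirCap for an arbitrary path.
    private DirCap(Path root) {
        this.root = root.toAbsolutePath().normalize();
    }

    // Trusted startup code (e.g. main) creates the original, most powerful key.
    static DirCap forRoot(Path root) {
        return new DirCap(root);
    }

    // Attenuation: clone the key, but confined to a subdirectory.
    DirCap narrow(String subdir) {
        return new DirCap(resolve(subdir));
    }

    // Map a relative name to a path, refusing anything that escapes the root.
    // The check is lexical only; symlinks are not handled.
    Path resolve(String name) {
        Path p = root.resolve(name).normalize();
        if (!p.startsWith(root)) {
            throw new SecurityException("escapes capability root: " + name);
        }
        return p;
    }

    // Reading is only possible through a path the capability approved.
    String read(String name) throws IOException {
        return Files.readString(resolve(name));
    }
}
```

Trusted startup code would call `DirCap.forRoot(...)` once and hand `narrow("logs")` to, say, a logging library, which can then resolve `app.log` but gets a `SecurityException` for `../secrets.txt`.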

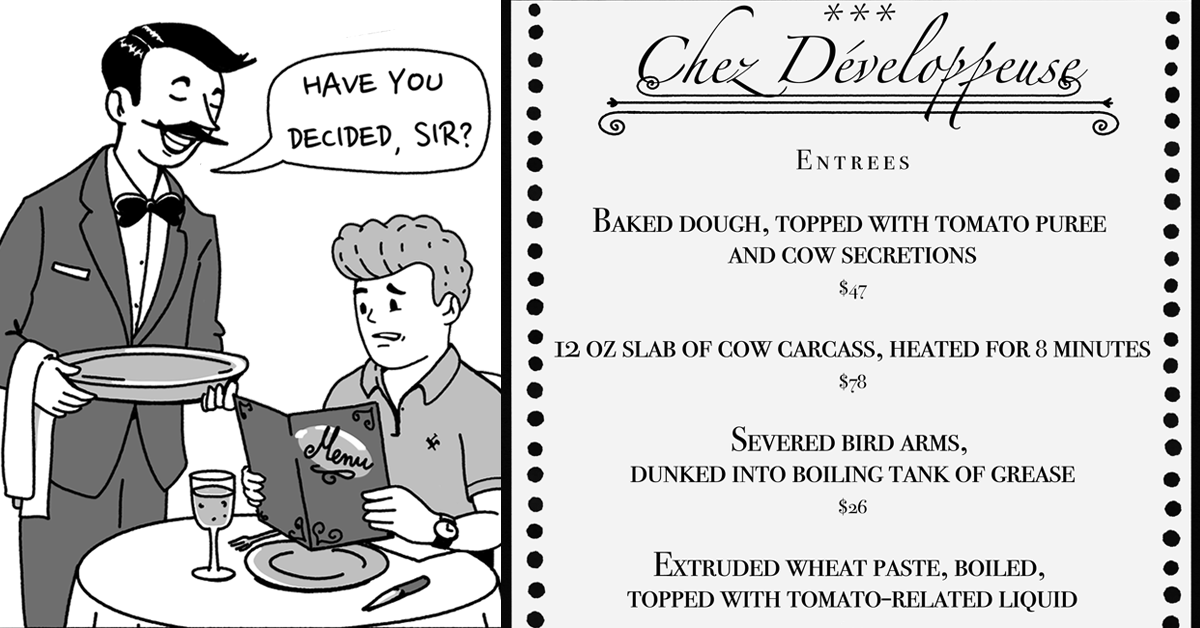

We already use capabilities regularly: handles, file descriptors and JWTs are all examples under different names, but we don’t use them within individual programs. Changing that is a popular idea, especially on Hacker News. It was first proposed by Morris in the 1970s, and it came up yet again just a few days ago.

What would it take to make this finally real? Do we really need yet another whole new programming and library ecosystem?

In this essay I want to show you the challenges that you’ll face if you want to walk that path. This isn’t meant to put anyone off, just to draw a map of the territory you’re about to enter and explain why it’s currently deserted. As we go I’ll introduce two very different takes on the concept: the history of capabilities in Java, and Chrome’s Mojo object-capability system.

Here are some problems you’ll have to solve in order to sandbox libraries:

- What is your threat model?

- How do you stop components tampering with each other’s memory?

- Do you inter-twingle your plan with other unrelated requirements, like cross-language interop?

- How much of humanity’s existing codebase can you reuse, if any?

Let’s start with the threat model, or stated more plainly, what exact problem are you trying to solve? Sandbox designers often disagree on how far they need to go. Do you care about resource exhaustion or DoS attacks? For example, is it OK if a library can call exit(0) and tear down your process, or deliberately segfault it? Do you care about Spectre attacks? Maybe!

Every time you make your threat model stronger it becomes harder to work with. The right thing to do here is deeply unclear.

Regardless of which model you choose, libraries expect to share memory with the code that uses them. An object capability system therefore can’t just be dropped into any old language! It must be carefully designed to provide what the Java team calls integrity: one bit of code must not be able to overwrite state depended on by another. Guaranteeing this property turns out to be hard, and in fact very few languages even attempt it. C/C++ don’t. Dynamic scripting languages like Python or Ruby don’t, because you can change the rules of the language on the fly by monkey-patching the standard library. JavaScript doesn’t really support it either: Google sponsored a research project called Caja for a while that rewrote JavaScript into a form claimed to have integrity, but it suffered many exploits and they eventually gave up.

The requirements can be best understood by reading a paper from 2004 describing a language called Joe-E.

The Joe-E language is a subset of Java designed to support pure capability programming within a shared address space. Java has the basics already: there’s no pointer arithmetic, you can’t monkey patch things, the language rules are fixed, and objects have enforced visibility rules. By looking at what Joe-E takes away from Java, we can see what more is needed.

Some things you have to remove are obvious enough:

- Reflection that can write to private fields or call private methods.

- Global methods in the standard library that grant ambient authority.

- Native methods.

And some are less so:

- Any mutable global variable is a problem as it may allow one component to violate expectations held by another. Joe-E forbids mutable global state.

- Exceptions might leak capabilities and are restricted.

- Finalizers are removed.

- The finally keyword is removed.
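A small Java sketch shows why mutable globals are a problem. The class names are hypothetical: a plugin that was never handed any authority can still widen a fetcher’s host allow-list, simply because both can see the same static field.

```java
import java.util.ArrayList;
import java.util.List;

// Mutable global state. `final` only fixes the reference; the list itself
// can still be changed by any code that can see this class.
final class SharedConfig {
    static final List<String> ALLOWED_HOSTS = new ArrayList<>(List.of("example.com"));

    // An immutable alternative: List.of returns a list that rejects mutation.
    static final List<String> FROZEN_HOSTS = List.of("example.com");
}

// Component A: relies on the allow-list staying as its author configured it.
final class Fetcher {
    static boolean mayConnect(String host) {
        return SharedConfig.ALLOWED_HOSTS.contains(host);
    }
}

// Component B: was never handed any capability, yet widens A's policy anyway.
final class Plugin {
    static void init() {
        SharedConfig.ALLOWED_HOSTS.add("attacker.example");
    }
}
```

Note that `final` doesn’t help here; it freezes the reference, not the list. Making the shared value immutable, as with `List.of`, closes this particular channel.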

That’s a pretty radical departure from regular Java, and is why Joe-E is considered a subset language. You can’t just take an existing Java library and use it from Joe-E code. The biggest difficulty by far is what they called “taming” the standard library, meaning finding all the places where ambient authority is granted and blocking them.

It’s worth noting that although Joe-E was a research project, some of these changes are being adopted into Java proper for apps that use the module system:

- You’ll soon be required to specify which modules can use native code.

- You’re already required to specify which modules can use ‘deep’ reflection.

- Finalizers have already been deprecated for removal.

On their own these changes aren’t enough for a pure capability language, and since the SecurityManager has been removed there’s no official way to “tame” the standard library, so they don’t help much yet. But it’s an interesting direction of travel.

If you think through the consequences of the pure capability design some usability issues become apparent.

The first is that if you want the entire application to be written in an object-capability style then its main() method must be given a “god object” exposing all the ambient authorities the app begins with. You then have to write proxy objects that restrict access to this god object whilst implementing standard interfaces. No language has such an object or such interfaces in its standard library, and in fact “god objects” are viewed as violating good object oriented design.
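A minimal sketch of that shape, with every type hypothetical (as noted, no standard library ships these): `Powers` plays the god object, and `SingleFileSink` is a proxy that exposes one file through an ordinary `LogSink` interface, so the logging library receives nothing else.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical "god object": every authority the process starts with.
interface Powers {
    String readEnv(String name);
    void appendFile(String path, String text);
    String httpGet(String url);
}

// Hypothetical narrow interface that a logging library asks its caller for.
interface LogSink {
    void write(String line);
}

// Proxy: implements the ordinary LogSink interface on top of the god object,
// exposing the ability to append to one file and nothing else.
final class SingleFileSink implements LogSink {
    private final Powers powers;
    private final String path;

    SingleFileSink(Powers powers, String path) {
        this.powers = powers;
        this.path = path;
    }

    @Override
    public void write(String line) {
        powers.appendFile(path, line + "\n");
    }
}

// The library only ever sees a LogSink. (Plain Java won't stop it calling
// System.getenv itself; blocking that is the "taming" problem.)
final class TinyLogger {
    private final LogSink sink;

    TinyLogger(LogSink sink) {
        this.sink = sink;
    }

    void info(String msg) {
        sink.write("INFO " + msg);
    }
}

// In-memory stand-in for the real god object, for demonstration only.
final class InMemoryPowers implements Powers {
    private final Map<String, String> files = new HashMap<>();

    @Override public String readEnv(String name) { return null; }
    @Override public void appendFile(String path, String text) { files.merge(path, text, String::concat); }
    @Override public String httpGet(String url) { throw new UnsupportedOperationException("no network"); }

    String contents(String path) { return files.getOrDefault(path, ""); }
}
```

In `main` you’d write something like `new TinyLogger(new SingleFileSink(powers, "app.log"))`. Anything extra the logger wants later, such as a network sink, means a new constructor parameter.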

With no ambient authority, every resource a library or its dependencies might need to use has to be passed in by the caller. And therefore, changing what permissions are needed implies a backwards-incompatible change to the API itself.

This is a problem because many users of a library won’t care about sandboxing. They may have good reasons to not care — perhaps they trust you because of who you work for, or their data isn’t sensitive, or they use some other security mechanism, or they just want to get their job done ASAP. If you constantly stop their code compiling with API changes that don’t benefit them, they’re going to get upset and switch to a competing library. How often you need to request new permissions will depend heavily on the design of your language and standard library, so be careful. For example, a library reading a new data file it ships with shouldn’t require the user to supply new permissions (Java libraries are zips that can contain data files, so this is handled there).

Another problem is configurability. It’s common for libraries to read global state, for instance, environment variables that turn on logging. None of this is allowed in a capability system: the caller of the code has to read environment variables and config files for it.
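Concretely, the change looks something like this sketch. `System.getenv` is the real JDK call standing in for ambient authority; `HTTPLIB_DEBUG` and the class names are made up for illustration.

```java
import java.util.Map;

// Ambient style: the library reads process-wide state on its own.
// HTTPLIB_DEBUG is a hypothetical variable name.
final class AmbientHttpLib {
    final boolean verbose = "1".equals(System.getenv("HTTPLIB_DEBUG"));
}

// Capability style: the library only knows what its caller passes in.
final class CapHttpLib {
    final boolean verbose;

    CapHttpLib(boolean verbose) {
        this.verbose = verbose;
    }
}

final class AppSetup {
    // The application, which does hold the authority to read its environment,
    // decides what to forward. In real code: configure(System.getenv()).
    static CapHttpLib configure(Map<String, String> env) {
        return new CapHttpLib("1".equals(env.get("HTTPLIB_DEBUG")));
    }
}
```

The cost shows up as soon as the library grows a second setting: `CapHttpLib`’s constructor changes, which is exactly the API churn described above.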

The God Object problem is why the Java SecurityManager architecture wasn’t a pure capability system. It enabled capabilities, but it didn’t require them. In the SecurityManager design all code starts with ambient authority. Each method is associated with a module, and modules can have ambient permissions taken away, leaving them either unable to do that operation or only able to do it if they’re given an object capability.

It works like this: when one module calls into another that guards some possibly sensitive operation (e.g. the standard library), the guard does a stack walk over all the code calling into it, intersecting their privileges. This design had at least three advantages:

- People who didn’t care about sandboxing could ignore it.

- The needed permissions could be described with a simple config file instead of code or docs. This made it easy to ship canned profiles that showed developers what permissions a library might need in different situations, and then let them customize it to minimize privilege.

- You could create object capabilities by defining classes in privileged modules with a safe interface and then “asserting privilege”, which truncated the stack walk at that frame.
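The stack walk can be modelled in a few lines. This is a deliberately simplified simulation, not the JDK’s actual `AccessController` implementation, and the permission strings are invented: each frame carries its module’s granted permissions, the guard walks outward from the innermost frame, and a frame that asserted privilege ends the walk after itself.

```java
import java.util.List;
import java.util.Set;

// Simplified model of one stack frame: the module it belongs to, the
// permissions that module was granted, and whether this frame asserted
// privilege (the role AccessController.doPrivileged played in the JDK).
final class CallFrame {
    final String moduleName;
    final Set<String> granted;
    final boolean privileged;

    CallFrame(String moduleName, Set<String> granted, boolean privileged) {
        this.moduleName = moduleName;
        this.granted = Set.copyOf(granted);
        this.privileged = privileged;
    }
}

final class StackWalk {
    // `stack` is ordered innermost (most recent call) first, as the guard sees it.
    static boolean check(List<CallFrame> stack, String permission) {
        for (CallFrame frame : stack) {
            // Effective privilege is the intersection over every frame walked.
            if (!frame.granted.contains(permission)) {
                return false;
            }
            // Asserting privilege truncates the walk: outer callers aren't consulted.
            if (frame.privileged) {
                return true;
            }
        }
        return true;
    }
}
```

With an untrusted plugin calling a trusted HTTP library, the check fails because the plugin’s frame lacks the permission. Once the library asserts privilege, the walk stops at the library and the same call succeeds, which is how a privileged module could hand out a safe object capability.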

The design was elegant and theoretically sound, but plagued with practical usability problems and bugs. One issue was the baffling number of ambient permissions a program actually has. The NetPermission class alone, one of many permission classes, defines targets such as `setDefaultAuthenticator`, `setProxySelector` and `allowHttpTrace`.

Did you ever think about the HTTP TRACE method? Probably not, but the Java sandbox designers did because doing a TRACE request is potentially harmful and thus requires a permission.

Most languages let you do HTTP requests. In a system with only object capabilities, how would you restrict access to TRACE whilst allowing GET on a domain? This becomes especially tricky when the authors and users of a library are separated. The user must supply the library with an HTTP client, and that client has to be either configurable or proxyable in order to turn it into an object capability, and the user must understand exactly what’s required by the library. Type systems don’t help: you can’t express a type like “HTTP client that allows access to example.com but nowhere else” (ok maybe in TypeScript, but TypeScript doesn’t have a VM that enforces its rules so that’s useless for security).
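The object-capability answer is an attenuating wrapper that the user builds and hands to the library. A sketch, assuming a hypothetical `HttpCap` interface rather than any real HTTP client API: the wrapper only forwards GET requests over HTTPS to one exact host, the policy the type system couldn’t express.

```java
import java.net.URI;
import java.util.Set;

// Hypothetical minimal HTTP capability: a library can only make requests through this.
interface HttpCap {
    String send(String method, URI uri);
}

// Attenuating proxy: forwards only allowed methods, over HTTPS, to one exact host.
final class RestrictedHttp implements HttpCap {
    private final HttpCap inner;
    private final String host;
    private final Set<String> methods;

    RestrictedHttp(HttpCap inner, String host, Set<String> methods) {
        this.inner = inner;
        this.host = host;
        this.methods = Set.copyOf(methods);
    }

    @Override
    public String send(String method, URI uri) {
        if (!methods.contains(method)) {
            throw new SecurityException("method not allowed: " + method);
        }
        // URI.getHost ignores user-info, so "https://example.com@evil.example/" is rejected.
        if (!"https".equals(uri.getScheme()) || !host.equalsIgnoreCase(uri.getHost())) {
            throw new SecurityException("destination not allowed: " + uri);
        }
        return inner.send(method, uri);
    }
}
```

Because `HttpCap` has a single method, a test double can be a lambda. Note how much the user has to know: which host, which methods, and that the library needs an `HttpCap` at all.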

The SecurityManager design solved all of this. If a library needed access to a specific website it could just come with a config file that listed that domain in a NetPermission, the user could quickly audit it before using the library, the library would request an HTTP client purely internally and everything just worked. If the overhead of sandboxing wasn’t wanted it could be eliminated by the JIT compiler.

So why did the SecurityManager die then, just as supply chain attacks were starting to become real? You can read the official rationale or my summary of it:

- Java has a lot of features.

- Maintaining permission checks for all those features was expensive.

- It was hard to avoid exploits.

- Nobody cared enough about supply chain attacks to use sandboxing anyway.

- Even the few who did use it were using it wrong.

Well, an expensive thing that breaks a lot and nobody uses is an obvious target for cost savings… and there it is. Even with the usability advantages it gave over pure capability systems, in-process sandboxing was still too much effort for developers to bother with. Supply chain attacks are still rare enough that defensive measures just aren’t at the top of most backlogs and probably won’t be for some time.

There’s another reason the SecurityManager was abandoned. Speculative execution attacks allow any code to read the entire address space without any permissions, even in the face of languages designed to stop that. Hacks like reducing the resolution of timers help but don’t entirely fix it, and come at great cost. At this point the designers of most software sandboxes cried out in despair and just gave up, declaring that only hardware-enforced address spaces could properly constrain software components.

I personally think this belief isn’t quite true. Reading data is only useful in real-world attacks if you can exfiltrate it somewhere, or if you can steal a credential that lets you escalate your privilege. Many analyses of software security declare victory as soon as any bytes have been read at all, without pondering where those bytes will go next. That isn’t totally unreasonable, because in the web context the ability to exfiltrate data is an ambient permission, but if we’re talking about sandboxing libraries then it’s a very different matter. Most libraries don’t need permission to write to files or the network, so even if one somehow pulled off an undetected Spectre attack (hard), it couldn’t easily send the data it had read back to the adversary.

Still. How about hardware isolation? File descriptors are a kind of capability provided by the kernel, but a rather odd and inflexible kind. They aren’t a great example of object capabilities.

Windows does slightly better, with its kernel’s notion of the ‘object manager’ and a more generalized notion of handles.

But the best example is Chrome.

A running Chrome is actually a local network of microservices communicating via a protocol called Mojo. Mojo is quite interesting. It lets you bind objects to “message pipes” that connect processes, which turns them into object capabilities. Pipes are unforgeable and can be sent through other pipes, allowing an object in one process that has a certain set of kernel permissions to be exposed to other processes that have fewer permissions. A running Chrome is a whole graph of objects pointing between services. Mojo abstracts object location (services can run in separate processes or in-process without code changes) and also offers optimizations like shared memory. The Chrome sandbox then lowers the ambient authority of each process to the minimum needed for its task. Objects are moved into sandboxes with a simple annotation.
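Mojo’s real API is C++ and isn’t reproduced here. As a rough in-process analogy only, with all names hypothetical: a pipe is a pair of queue-backed endpoints, messages can carry other endpoints, and holding an endpoint is the capability to talk to whatever sits on the far end. In Chrome the handles are enforced by the kernel across processes; here unforgeability rests only on Java’s access rules.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// A message carries data and, optionally, an endpoint being transferred.
final class PipeMessage {
    final String data;
    final Endpoint attached; // null if no endpoint is being sent

    PipeMessage(String data, Endpoint attached) {
        this.data = data;
        this.attached = attached;
    }
}

// One end of a bidirectional pipe. There's no public constructor, so the only
// ways to obtain an endpoint are to create a pipe or to receive one.
final class Endpoint {
    private final BlockingQueue<PipeMessage> inbox;
    private final BlockingQueue<PipeMessage> outbox;

    private Endpoint(BlockingQueue<PipeMessage> inbox, BlockingQueue<PipeMessage> outbox) {
        this.inbox = inbox;
        this.outbox = outbox;
    }

    // Creates two connected endpoints: what one sends, the other receives.
    static Endpoint[] createPipe() {
        BlockingQueue<PipeMessage> aToB = new LinkedBlockingQueue<>();
        BlockingQueue<PipeMessage> bToA = new LinkedBlockingQueue<>();
        return new Endpoint[] { new Endpoint(bToA, aToB), new Endpoint(aToB, bToA) };
    }

    // Sends data, optionally transferring another endpoint along with it.
    void send(String data, Endpoint attached) {
        outbox.add(new PipeMessage(data, attached));
    }

    // Non-blocking receive; returns null if nothing is waiting.
    PipeMessage poll() {
        return inbox.poll();
    }
}
```

A privileged component can create a service pipe and pass one end over an existing control pipe; the receiver can then use the service without ever having been able to create it.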

Whilst it sounds easy, there are at least four catches. Firstly, given the importance of kernel sandboxing to the web and how long browsers have used it, you might imagine that it’s backed by a mature set of powerful APIs. But you’d be wrong. Not one OS has a sandboxing API that is documented, stable and usefully powerful all at once. Nor are the approaches used by Linux, macOS and Windows even slightly similar. So how does Chrome do it? The answer is lots of reverse engineering of OS internal APIs, a willingness to react fast to incompatible OS upgrades, being too big to fail, and finally, lots of very platform-specific code with not much abstraction.

The second catch is that Mojo isn’t easily reused — there’s no standalone version you can download and use in your own projects. Nonetheless, if you want an object capability system that blocks speculation attacks, the Chrome codebase is a good place to start. It also comes with a large library of code designed to run without ambient authority, albeit all written in C++ and without stable APIs.

The third catch is that Mojo is really an RPC system. Services can share memory but only in large blocks, and only very carefully. It doesn’t offer any specific support for managing object lifetimes across processes. Memory management protocols must be carefully designed to avoid inter-process resource leaks or refcount cycles. The fact that there’s a security boundary in place requires constant attention whilst designing your code.

The final catch is that context switches have a high cost, especially on Windows. Can we reduce the overhead? CPUs that support memory protection keys (MPKs) can, with enough work, switch memory protection contexts much faster than a typical context switch would. This requires careful validation of all native code (including JIT-compiled code) to ensure it doesn’t contain instructions that write the protection key register (PKRU on x86), but it can be done.

Object capability systems offer the promise of sandboxed libraries and a big advance in the war against supply chain attacks, but they’re hard to build. The best example of research into making a mainstream pure object capability language is Joe-E, a long since defunct subset of Java. The best example of a real-world object capability system is Chrome.

How to make library sandboxing easy enough that people actually adopt it is still an open question. I have some ideas, but none are fleshed out enough to implement today. Maybe I’ll write about that another day. Until then, I hope this quick tour of the territory was useful!
