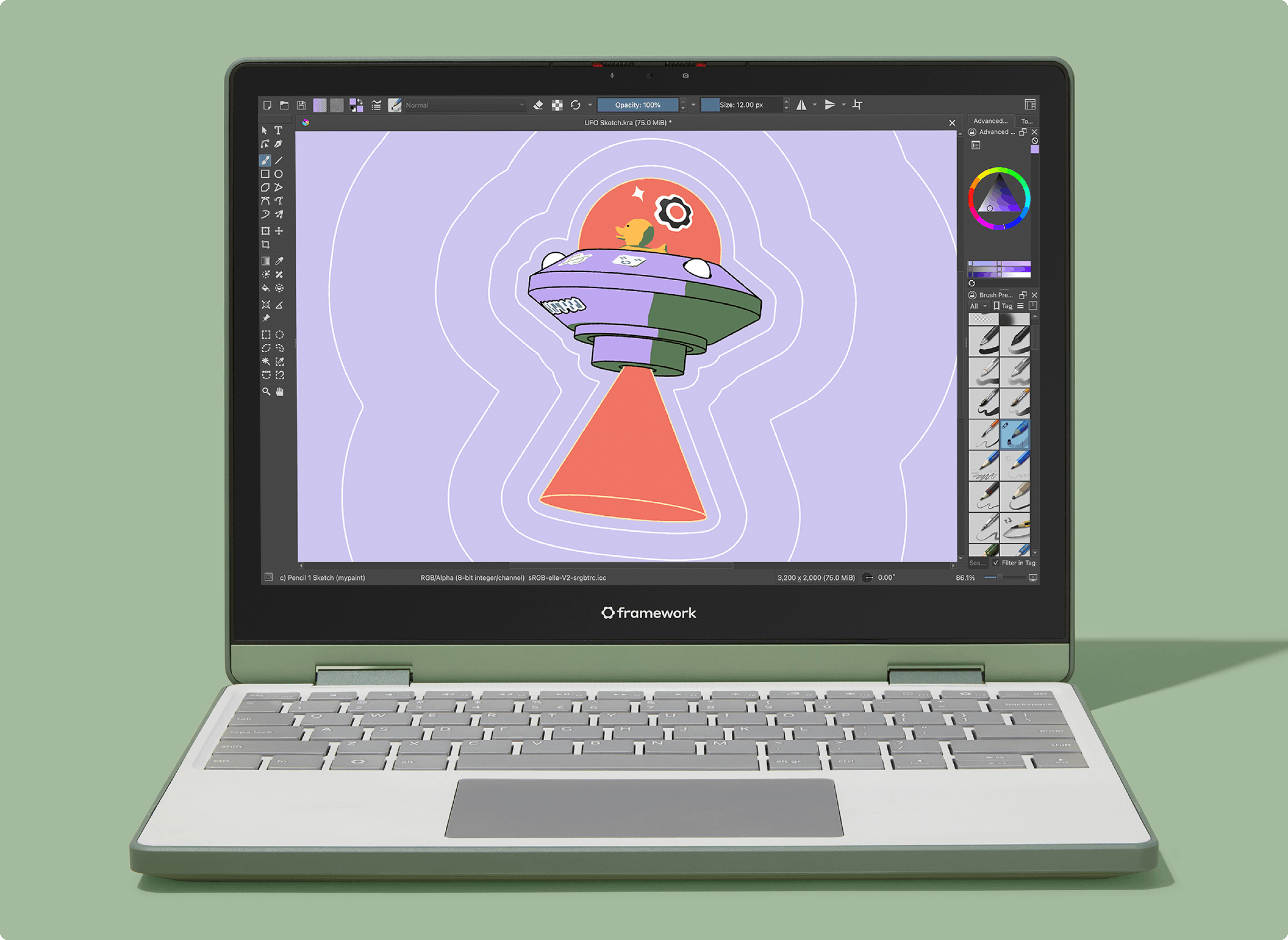

Control 3D models using hand gestures and voice commands in real time.

An interactive web app built with Three.js, MediaPipe computer vision, the Web Speech API, and Rosebud AI.

- Say "drag", "rotate", "scale", or "animate" to change the interaction mode

- Pinch fingers to control the 3D model

- Drag and drop a new 3D model onto the page to import it (only the GLTF format is supported for now)
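The voice-driven mode switch described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the names `MODES`, `modeFromTranscript`, and `currentMode` are hypothetical, the default mode is an assumption, and the Web Speech API wiring only runs in browsers that expose `SpeechRecognition` (or the prefixed `webkitSpeechRecognition`).

```javascript
// Mode names taken from the spoken commands listed above.
const MODES = ["drag", "rotate", "scale", "animate"];

// Return the last mode keyword found in a transcript, or null if none is present.
function modeFromTranscript(transcript) {
  const words = transcript.toLowerCase().match(/[a-z]+/g) || [];
  for (let i = words.length - 1; i >= 0; i--) {
    if (MODES.includes(words[i])) return words[i];
  }
  return null;
}

let currentMode = "drag"; // assumed default, not confirmed by the project

// Browser-only wiring; skipped wherever the Web Speech API is unavailable.
const SpeechRecognitionImpl =
  globalThis.SpeechRecognition || globalThis.webkitSpeechRecognition;

if (SpeechRecognitionImpl) {
  const recognition = new SpeechRecognitionImpl();
  recognition.continuous = true; // keep listening after each phrase
  recognition.lang = "en-US";
  recognition.onresult = (event) => {
    // Use the most recent recognition result and its top alternative.
    const latest = event.results[event.results.length - 1];
    const mode = modeFromTranscript(latest[0].transcript);
    if (mode) currentMode = mode;
  };
  recognition.start();
}
```

Keeping the keyword matching in a pure function makes it easy to test without a microphone. Only the `onresult` handler depends on the browser.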

- Modern web browser with WebGL support

- Camera / microphone access

- Three.js for 3D rendering

- MediaPipe for hand tracking and gesture recognition

- Web Speech API for speech recognition

- HTML5 Canvas for visual feedback

- JavaScript for real-time interaction
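As a rough sketch of how a pinch gesture might be derived from hand tracking, the snippet below measures the distance between the thumb tip and index fingertip. It assumes landmarks arrive as an array of 21 `{x, y, z}` points with `x` and `y` normalized to the image, which is how MediaPipe reports hands, and uses MediaPipe's landmark indices 4 (thumb tip) and 8 (index fingertip). The function names and the `0.05` threshold are hypothetical and would need tuning; this is not necessarily how the app itself detects pinches.

```javascript
// MediaPipe hand landmark indices: 4 is the thumb tip, 8 is the index fingertip.
const THUMB_TIP = 4;
const INDEX_TIP = 8;

// 2D distance between thumb tip and index fingertip in normalized image units.
// Depth (z) is ignored here to keep the sketch simple.
function pinchDistance(landmarks) {
  const thumb = landmarks[THUMB_TIP];
  const index = landmarks[INDEX_TIP];
  return Math.hypot(thumb.x - index.x, thumb.y - index.y);
}

// Treat the hand as pinching when the two fingertips are closer than the threshold.
// The default threshold is an assumed value, not taken from the project.
function isPinching(landmarks, threshold = 0.05) {
  return pinchDistance(landmarks) < threshold;
}

// Midpoint of the pinch, usable as a cursor position for dragging the model.
function pinchPoint(landmarks) {
  const thumb = landmarks[THUMB_TIP];
  const index = landmarks[INDEX_TIP];
  return { x: (thumb.x + index.x) / 2, y: (thumb.y + index.y) / 2 };
}

// Example with synthetic landmarks: every point at the image center,
// then the index fingertip moved slightly away from the thumb.
const hand = Array.from({ length: 21 }, () => ({ x: 0.5, y: 0.5, z: 0 }));
hand[INDEX_TIP] = { x: 0.52, y: 0.5, z: 0 };
console.log(isPinching(hand)); // true
```

Because the coordinates are normalized separately by image width and height, a fixed threshold behaves slightly differently along each axis on non-square video. Scaling by the frame's aspect ratio is one way to compensate.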

```bash
# Clone this repository
git clone https://github.com/collidingScopes/3d-model-playground

# Navigate to the project directory
cd 3d-model-playground

# Serve with your preferred method (example using Python)
python -m http.server
```

Then navigate to http://localhost:8000 in your browser.

MIT License

- Three.js - https://threejs.org/

- MediaPipe - https://mediapipe.dev/

- Rosebud AI - https://rosebud.ai/

- Quaternius 3D models - https://quaternius.com/

You might also like some of my other open source projects:

- Threejs hand tracking tutorial - Basic hand tracking setup with Three.js and MediaPipe computer vision

- Particular Drift - Turn photos into flowing particle animations

- Liquid Logo - Transform logos and icons into liquid metal animations

- Video-to-ASCII - Convert videos into ASCII pixel art

- Instagram: @stereo.drift

- Twitter/X: @measure_plan

- Email: [email protected]

- GitHub: collidingScopes

If you found this tool useful, feel free to buy me a coffee.

My name is Alan, and I enjoy building open source software for computer vision, games, and more. This would be much appreciated during late-night coding sessions!
