Among motorcyclists, there is a persistent rumor that Teslas are dangerous to ride around in traffic. Whether it’s their silent electric drivetrain, extreme acceleration, or self-driving technology supposedly failing to see motorcycles, every biker seems to know someone who’s had a close call with a Tesla.

Our new analysis of NHTSA data on self-driving crashes bears this rumor out. Since 2022, Teslas in self-driving mode have been involved in at least five fatal motorcycle crashes.

It’s not just that self-driving cars in general are dangerous for motorcycles, either: this problem is unique to Tesla. Not a single other automobile manufacturer or ADAS self-driving technology provider reported a single motorcycle fatality in the same time frame.

So, why are Teslas involved in self-driving crashes that kill motorcyclists? Let’s interrogate the data and get to the bottom of it.

TL;DR: Self-Driving Teslas Rear-End Motorcyclists, Killing at Least 5

Brevity is the soul of wit, and I am just not that witty. This is a long article, so here is the gist of it:

- The NHTSA’s self-driving crash data reveals that Tesla’s self-driving technology is, by far, the most dangerous for motorcyclists, with five fatal crashes that we know of.

- This issue is unique to Tesla. Other self-driving manufacturers have logged zero motorcycle fatalities in the same time frame.

- The crashes are overwhelmingly Teslas rear-ending motorcyclists.

Self-Driving Teslas Are Fatally Striking Motorcyclists From Behind

I learned to ride a motorcycle on a military base, and the sergeant was brutally honest.

He said: “If you’re going to die on the bike, it’s going to be your own fault.”

Welcome to being a motorcyclist. Our margin of error is slim, and mistakes are easily fatal. It’s rough out here on two wheels. Helmet and jacket, strongly encouraged.

Rider error, while tragic, is a fact of life. So the first item to rule out is whether the motorcyclists themselves are causing these accidents.

The good news: we can largely rule out rider error in these crashes. The NHTSA reports where on the self-driving vehicle the impact occurred, so we can tell whether the Tesla was t-boned or rear-ended. If a motorcyclist had run into the Tesla, we would expect to see damage to the Tesla’s side or rear.

That’s not the case for the self-driving Tesla motorcycle fatalities reported to the NHTSA.

According to the NHTSA data:

- 3 out of 5 cases show the Tesla sustained front-end impacts, or front and left-side impacts, or (grimly) front and bottom impacts.

- 2 out of 5 cases had crash direction marked as “unknown” in the manufacturer’s reporting to the government.

Those two “unknown” cases are both in Florida. One of them, in Boca Raton, was a Tesla rear-ending a motorcycle stopping at a traffic light. The other Florida case has fewer details available.

That means that, in 100% of Tesla’s fatal self-driving motorcycle crashes where we know the direction of travel, the Tesla was behind the motorcyclist before impact. The self-driving Teslas are fatally striking the motorcyclists from behind.
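For readers who want to check the arithmetic, here is a minimal Python sketch of that tally. The report IDs and outcomes come from the case write-ups further down in this article. The `FatalCase` class and its field names are my own illustrative choices, not NHTSA column names, and the three front-impact variants are collapsed into a single flag.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FatalCase:
    report_id: str
    location: str
    # True when the NHTSA report lists a front-end impact on the Tesla,
    # False when the crash direction is marked "unknown".
    nhtsa_front_impact: bool
    # True/False when any source (NHTSA or press) establishes the direction,
    # None when no source does.
    tesla_struck_from_behind: Optional[bool]


# The five fatal cases discussed in this article (hypothetical encoding).
CASES = [
    FatalCase("13781-7567", "Snohomish WA", True, True),
    FatalCase("13781-3713", "Boca Raton FL", False, True),  # direction from press and police reports
    FatalCase("13781-3488", "Bluffdale UT", True, True),
    FatalCase("13781-3470", "unknown city, FL", False, None),
    FatalCase("13781-3332", "Riverside CA", True, True),
]


def summarize(cases):
    """Count cases by what we know about the direction of impact."""
    known = [c for c in cases if c.tesla_struck_from_behind is not None]
    from_behind = [c for c in known if c.tesla_struck_from_behind]
    return {
        "total": len(cases),
        "nhtsa_front_impact": sum(c.nhtsa_front_impact for c in cases),
        "direction_known": len(known),
        "from_behind": len(from_behind),
        "share_from_behind": len(from_behind) / len(known) if known else None,
    }


if __name__ == "__main__":
    print(summarize(CASES))
```

Run as written, it counts four cases with a known direction, and in all four the Tesla struck the motorcycle from behind. The fifth case stays out of the denominator because no source establishes what happened.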

We’d Love to Tell You More, But Tesla’s Self-Driving Data Is Redacted

It would be helpful to have the official crash narratives for context. The NHTSA requires that automobile manufacturers and self-driving technology providers offer that context in their governmental crash reporting.

Tesla is unique in that they request all of their narrative data be redacted, along with many other data points, citing “confidential business information” or “personally identifiable information” about the victim.

Learn more about that here: Tesla Asked for Redactions on Every Self-Driving Crash Reported to the NHTSA Since 2021

This limits journalists’ ability to see the full picture behind these crashes and what might be causing them.

Speaking of investigations… why did the Department of Government Efficiency (DOGE) cut the NHTSA’s self-driving safety office staff by almost half? Why cut back on the small office capable of investigating Tesla’s unredacted safety data? Seems like an odd place to start cutting costs.

Anyway, here is how we constructed our study.

A Note on Methodology

To be included in the NHTSA’s self-driving crash reporting, known as the “Standing General Order,” the car’s self-driving technology must have been active within 30 seconds of the crash.

We don’t have access to internal Tesla data, which could tell us whether self-driving was still active at the moment of impact. We can only infer from what we do have.

This is good and bad: bad in that it is not as granular as we would like, but good in that it is standardized. Every manufacturer is held to the same standard.
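To make the inclusion rule concrete, here is a small sketch of it in Python. The function name, its parameter, and the choice to treat exactly 30 seconds as inside the window are my assumptions for illustration; the only thing taken from the reporting requirement is the 30-second figure.

```python
from typing import Optional

REPORTING_WINDOW_S = 30.0  # the window described above


def in_reporting_window(seconds_since_last_active: Optional[float]) -> bool:
    """Return True if self-driving was active within the window before the crash.

    seconds_since_last_active is a hypothetical input: 0.0 means the system
    was still active at impact, and None means it was never engaged.
    """
    if seconds_since_last_active is None:
        return False
    return 0.0 <= seconds_since_last_active <= REPORTING_WINDOW_S


if __name__ == "__main__":
    for gap in (0.0, 25.0, 45.0, None):
        print(gap, in_reporting_window(gap))
```

Note what this implies: a crash where the system disengaged 25 seconds before impact still lands in the dataset, which is exactly why we can’t say from the public data whether self-driving was active at the moment of the crash.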

For the rest, we’ve tracked down each crash in local news reporting. Read those narratives below.

Filling in Tesla’s Self-Driving Crash Redactions with Press Coverage

It’s hard to hide the truth in the 21st century. We have city names and months for each fatal motorcycle crash involving a Tesla in self-driving mode. Most of the other significant data is redacted at Tesla Inc’s request, but I’ve done more with less. That’s enough to start connecting the dots with public news reports and police reports.

Below, I’ve linked publicly available reporting for each case to support our conclusions above. The details are distressing, so if you’d rather avoid reading about fatal car accidents, skip ahead to my conclusions.

April 2024, Snohomish WA:

NHTSA Report ID 13781-7567.

The self-driving subject vehicle is a 2022 Tesla Model S. The Tesla fatally struck a motorcyclist from behind in low-speed stop and go traffic on a highway near Seattle.

The driver reports that he was looking at his cellphone with his Tesla’s Full Self-Driving mode engaged. Allegedly, the driver heard a bang, his Tesla suddenly accelerated, and it struck the stationary motorcyclist in front of him from behind, pinning the motorcyclist under the Tesla.

Interestingly, the Washington State Patrol determined that Full Self-Driving was active not through any governmental collaboration with the NHTSA or similar, but by obtaining a warrant and downloading the data directly from the Tesla’s computer.

The driver was arrested for vehicular homicide, due to being distracted by his cellphone. Documents here with witness statements in the police reports:

https://www.opb.org/article/2025/01/15/tesla-may-face-less-accountability-for-crashes-under-trump

Of further interest, the Tesla feature to brake after a crash has occurred (automatic emergency braking) can be disengaged by pressing the accelerator pedal. The Tesla’s onboard data reports that the accelerator pedal was pressed 95-100% continuously for 10 seconds after the collision. A witness reports that the Tesla’s front wheels were spinning while up in the air, which means that no brake was being applied, even after the impact where the motorcyclist was pinned beneath the vehicle.

This is a tragically easy mistake to make, for a driver dazed and confused in a crash situation. It’s amazing that no technical system enforces braking in a more difficult-to-override way when sensors detect a crash has occurred.

August 2023, Green Cove Springs FL:

NHTSA Report ID 13781-6205.

The self-driving subject vehicle is a 2023 Tesla Model Y. Details are extremely scant, just what is unredacted in the NHTSA reporting. I really wish we had access to the unredacted data!

This one, I do not include in our fatality totals. The injury severity was reported to the NHTSA as “unknown.” The airbags on the Tesla deployed during a crash involving a motorcycle, which is consistent with serious or fatal injuries to motorcycle riders, but we just can’t know for sure with the data redactions.

I couldn’t find any local news coverage for the crash in rural Florida in several hours of trying. If anybody knows more about this case, please let me know on the Contact page!

I include this case to make a point: there are countless Tesla self-driving crashes where the data is so heavily redacted that it is simply not possible to tell what is going on. That’s why I say there are “at least 5” self-driving Tesla accidents that have killed motorcyclists. I highly suspect that some of those “unknown” or redacted data points are motorcycle crashes.

August 2022, Boca Raton FL:

NHTSA Report ID 13781-3713.

The self-driving subject vehicle is a 2020 Tesla Model 3. The Tesla fatally struck a motorcyclist from behind at above 100MPH on a 45MPH speed limit road in suburban Boca Raton. The motorcyclist was properly stopping at a yellow light, according to police reports.

The driver was arrested on vehicular homicide and DUI manslaughter charges just under a year after the fatal crash. He pleaded guilty, reducing a potential sentence of 30 years to just 2 years in prison. In his guilty plea, he specified that he was relying on Tesla’s self-driving technology while intoxicated.

https://www.cnn.com/2022/10/17/business/tesla-motorcycle-crashes-autopilot/index.html

You might wonder how a vehicle with self-driving technology was traveling more than double the speed limit into a yellow light with a motorcyclist braking in front of them. This Reddit thread explains the feature.

Certain Tesla self-driving technologies are speed capped, but others are not. Simply pressing the accelerator will raise your speed in certain modes, and as we saw in the police filings from the Washington State case, pressing the accelerator also cancels emergency braking.

That’s how you would strike a motorcyclist at such extreme speed: simply press the accelerator, and all other inputs are apparently overridden.

July 2022, Bluffdale UT:

NHTSA Report ID 13781-3488.

The self-driving subject vehicle is a 2020 Tesla Model 3. The Tesla fatally struck a motorcyclist from behind on a Utah highway. The Tesla’s final speed before it crashed into the motorcyclist was reported to the NHTSA as 61MPH.

https://kjzz.com/news/local/motorcyclist-dies-after-being-hit-by-tesla-reportedly-on-autopilot

The driver reports that they did not see the motorcyclist, which will sound immediately familiar to any of my readers who are motorcyclists. As is typical in fatal motorcycle crashes with no exacerbating human factors like alcohol or distraction, we could find no record that charges were filed.

This accident was probed by the NHTSA’s investigation teams.

https://www.insurancejournal.com/news/midwest/2022/08/08/679062.htm

April 2022, reported to the NHTSA as an unknown city in Florida:

NHTSA Report ID 13781-3470.

The self-driving subject vehicle is a 2020 Tesla Model Y.

I can find literally zero news reporting on this fatal accident involving a Tesla self-driving vehicle, so we need to rely on the NHTSA data to assemble the narrative. It’s starting to become apparent why this data is so heavily redacted: it makes connecting the dots difficult!

The Tesla was traveling straight on a highway when it was involved in a fatal crash with a motorcycle. No further details are available. This is the only motorcycle fatality reported to the NHTSA where we are not certain that the rider was rear-ended by the Tesla.

The address of the crash was redacted as “[MAY CONTAIN PERSONALLY IDENTIFIABLE INFORMATION]” which limits our ability to locate the narrative details.

July 2022, Riverside CA:

NHTSA Report ID 13781-3332.

The self-driving subject vehicle is a 2021 Tesla Model Y. The Tesla fatally struck a motorcyclist from behind in the HOV lane on a California freeway.

https://techxplore.com/news/2022-08-agency-probes-tesla-motorcyclists.html

This is an alarmingly common trend in our analysis. Not an edge-case, no difficult weather or lighting conditions, simply a self-driving Tesla killing a motorcyclist by rear-ending them at speed.

This accident was probed by the NHTSA’s investigation teams.

https://www.insurancejournal.com/news/midwest/2022/08/08/679062.htm

Engineering Failures and Human Error Cascade to Cause Fatal Self-Driving Accidents

Self-driving Teslas developed such a reputation for ramming into parked emergency vehicles from behind that the NHTSA launched an investigation in 2022. Confusingly, the NHTSA is currently investigating whether the subsequent recall was effective. What the agency uncovered through close analysis of the unredacted self-driving crash data went far beyond firetrucks and police cruisers.

As part of its review, the Office of Defects Investigation (ODI) at the NHTSA found that Teslas had a general tendency to experience “frontal plane crashes.” Essentially, driving straight into obstacles in their path.

The issue wasn’t limited to emergency vehicles. Teslas using self-driving software were crashing into all sorts of things: barriers, stopped cars, and motorcycles.

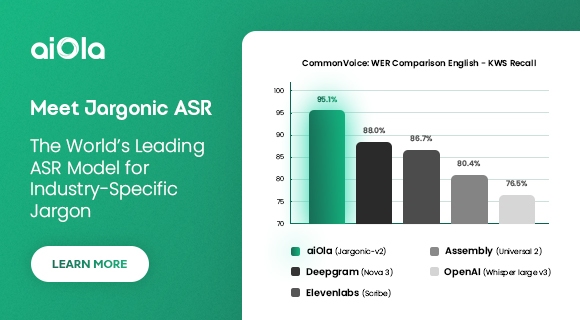

The NHTSA extensively reviewed Tesla’s self-driving crash data, which it has unredacted access to. The findings? A vast majority of these crashes occurred in situations where a visible hazard should have been detected and avoided well before impact.

Self-Driving Teslas Have Plenty of Time to Avoid Frontal-Plane Crashes… They Just Don’t

Here’s a telling table from the NHTSA report, showing that fully 93% of hazards in crashes would have been visible to a human in time to either avoid the accident entirely or at least take action to mitigate the severity of the accident:

The government’s report has this damning quote, couched in dense bureaucratic language:

“In certain circumstances when Autosteer is engaged, if a driver misuses the SAE Level 2 advanced driver-assistance feature such that they fail to maintain continuous and sustained responsibility for vehicle operation and are unprepared to intervene, fail to recognize when the feature is canceled or not engaged, and/or fail to recognize when the feature is operating in situations where its functionality may be limited, there may be an increased risk of a collision.”

In the case of the motorcycle crashes, the pattern is clear: Tesla’s self-driving technology either fails to detect the motorcyclist or does not apply the brakes once the motorcycle is detected. The human driver, meanwhile, is responsible for stepping in and braking manually… a task they fail to complete, because they are not paying attention, a situation which the Tesla’s attention detection system has also failed to recognize. When both the technology and the human backup fail, the result is a fatal collision.

This is a natural and enragingly obvious outgrowth of Level 2 self-driving technology. Of course drivers will get bored of watching their car drive itself for hours on end. Simply having a hand on the wheel is not a sufficient measure of whether the driver is paying attention, and Tesla’s other technical measures apparently do not work reliably. Motorcyclists pay for that stupid design decision with their lives.

Watch a Tesla Freak Out in FSD Mode Behind a Motorcyclist

Sometimes, seeing the technology in action helps illustrate what’s going wrong. In this video, a Tesla running a recent version of its unfortunately named “Full Self-Driving” (FSD) software behaves erratically when following a motorcycle.

The Tesla appears to struggle with maintaining a consistent follow distance. At times, it leaves an absurdly long gap between itself and the motorbike, which could be an indication that the system is compensating for weak sensor performance when detecting range on motorcycles.

The video creator even calls attention to the odd behavior, and the comment section is full of people reporting similar experiences… along with the usual Tesla defenders who downplay or dismiss the issue, or attack the video as a fraud. Whether those defenders are diehard fans, bots, or a mix of both is up for debate.

The bottom line? Tesla’s self-driving technology has serious issues with detecting and responding safely to motorcycles. When the system struggles, it leaves human drivers to pick up the slack when they have been lulled into inattention by a false sense of competency from the self-driving system. That’s a deadly combination, as evidenced by five (or more) fatal Tesla collisions with motorcyclists.

Of course, all of us are watching Tesla’s planned rollout of self-driving taxis with no human driver, slated for Austin in less than 60 days. If you’re a motorcycle rider like me, you might avoid the city limits of Austin TX for a minute, just to see how things shake out.
