Since early 2022, Motion was on CockroachDB. Cockroach has many qualities going for it: effortless horizontal scaling, especially when dealing with multi-region set ups, extremely high availability, and a SQL-compatible interface. Early on, there were concerns about the eventuality of a multi-region setup (mandated by GDPR), and how exactly a traditional set up on Postgres would scale.

However, as Motion grew, so did our usage and costs. By 2024, Motion’s CockroachDB bill had 5x-ed to the mid 6 figures, and some of the cracks were beginning to show. None of our customers had required data localization yet, and we were still in a single region doing fairly simple transactional queries — so why pay the cost of a distributed database at all?

Fortunately for us, we had an ORM that made testing head to head relatively trivial.

As the size of our database grew, we would frequently get into situations where Prisma would simply time out when applying migrations. We’d then have to log into Cockroach and manually run migrations one by one, concurrently of course.

When I finally moved us to Postgres, I tested a very similar migration head to head (the name of the column is different, just for demo purposes). Here. is Postgres applying this migration on a rewound instance of our database (to control for data size) in ten seconds.

The timeouts were leading to operational shortcuts. By 2024, the timeouts had become so acute that even really strong developers were actively doing things outside the DB purely out of fear of locking the entire system.

Even worse, the migration issues were proving to be a blocker to upgrade even Cockroach versions. We were stuck on version 22 well past the end of life, which made support even slower to reply to us.

In the end, we ended up just giving up and opting to move to Postgres, remaining on the defunct version 22 until the end.

The timeouts began affecting things outside of migrations — namely, ETL.

We’d become accustomed to waking to pages like this one:

Even when the ETL jobs worked and did not time out, the performance was brutal.

Unfortunately, there’s very little support for any real ETL solutions that offer CRDB replication. As of this writing, there is still only Airbyte’s connector (which was in alpha in 2024), and we discovered only after implementing that the connector had a memory leak.

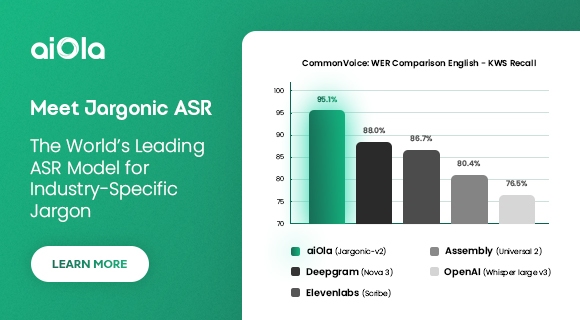

The query head to heads were interesting. Some queries were indeed faster on Cockroach, thanks to their optimizer:

For queries like the above, Cockroach would resolve in ~13 seconds while Postgres would take up to 20 seconds. It seems like the Cockroach query planner innately understands these queries and does aggregation while Postgres naively ends up doing a near full table scan. (This advantage seems to go away when comparing Drizzle instead of the SQL generated by Prisma. More on that in a separate blog post.)

But there were many situations when the “magic” of the Cockroach query planner seemed to cut the other way. Here is the Prisma code and the generated SQL, for which Cockroach opts to do a full table scan while Postgres does not:

The vast majority of our real world queries seemed to follow this general pattern where Prisma generates very convoluted SQL with numerous inclusions of various columns and joins. That, combined with the magical Cockroach optimizer, ultimately resulted in extremely high latencies. Here’s a real example of a query that is twenty times faster in Postgres than Cockroach.

SELECT"teamTasks"."id",

"teamTasks"."name",

"teamTasks"."description",

"teamTasks"."dueDate",

"teamTasks"."duration",

"teamTasks"."completedTime",

"teamTasks"."assigneeUserId",

"teamTasks"."priorityLevel",

"teamTasks"."statusId",

"teamTasks"."projectId",

"teamTasks"."createdByUserId",

"teamTasks"."createdTime",

"teamTasks"."updatedTime",

"teamTasks"."lastInteractedTime",

"teamTasks"."archivedTime",

"teamTasks"."workspaceId",

"teamTasks"."minimumDuration",

"teamTasks"."scheduledStart",

"teamTasks"."scheduledEnd",

"teamTasks"."startDate",

"teamTasks"."isChunkedTask",

"teamTasks"."isUnfit",

"teamTasks"."type",

"teamTasks"."needsReschedule",

"teamTasks"."deadlineType",

"teamTasks"."schedule",

"teamTasks"."parentChunkTaskId",

"teamTasks"."parentRecurringTaskId",

"teamTasks"."rank",

"teamTasks"."isFixedTimeTask",

"teamTasks"."slug",

"teamTasks"."previousSlugs",

"teamTasks"."endDate",

"teamTasks"."isBusy",

"teamTasks"."isAutoScheduled",

"teamTasks"."ignoreWarnOnPastDue",

"teamTasks"."scheduleOverridden",

"teamTasks"."snoozeUntil",

"teamTasks"."manuallyStarted",

"teamTasks_chunks"."data" AS "chunks",

"teamTasks_labels"."data" AS "labels",

"teamTasks_blockedTasks"."data" AS "blockedTasks",

"teamTasks_blockingTasks"."data" AS "blockingTasks"

FROM

"TeamTask" "teamTasks"

LEFT JOIN LATERAL (

SELECT

coalesce(json_agg(json_build_array("teamTasks_chunks"."id")), '[]'::json) AS "data"

FROM

"TeamTask" "teamTasks_chunks"

WHERE

"teamTasks_chunks"."parentChunkTaskId" = "teamTasks"."id") "teamTasks_chunks" ON TRUE

LEFT JOIN LATERAL (

SELECT

coalesce(json_agg(json_build_array("teamTasks_labels"."labelId")), '[]'::json) AS "data"

FROM

"TeamTaskLabel" "teamTasks_labels"

WHERE

"teamTasks_labels"."taskId" = "teamTasks"."id") "teamTasks_labels" ON TRUE

LEFT JOIN LATERAL (

SELECT

coalesce(json_agg(json_build_array("teamTasks_blockedTasks"."blockingId")), '[]'::json) AS "data"

FROM

"TeamTaskBlocker" "teamTasks_blockedTasks"

WHERE

"teamTasks_blockedTasks"."blockedId" = "teamTasks"."id") "teamTasks_blockedTasks" ON TRUE

LEFT JOIN LATERAL (

SELECT

coalesce(json_agg(json_build_array("teamTasks_blockingTasks"."blockedId")), '[]'::json) AS "data"

FROM

"TeamTaskBlocker" "teamTasks_blockingTasks"

WHERE

"teamTasks_blockingTasks"."blockingId" = "teamTasks"."id") "teamTasks_blockingTasks" ON TRUE

WHERE ("teamTasks"."workspaceId" in(

SELECT

"workspaceId" FROM "WorkspaceMember"

WHERE ("WorkspaceMember"."userId" = '{{user_id}}'

AND "WorkspaceMember"."deletedTime" IS NULL))

and("teamTasks"."workspaceId" in('{{workspace_id}}')

and("teamTasks"."id" in(

SELECT

"taskId" FROM "TeamTaskLabel"

WHERE

"TeamTaskLabel"."labelId" in('{{label_id}}'))

OR "teamTasks"."id" NOT in(

SELECT

"taskId" FROM "TeamTaskLabel"))

AND "teamTasks"."archivedTime" IS NULL

AND "teamTasks"."completedTime" IS NULL))

Most of our queries on the Team Tasks table were, on average, three times slower on Cockroach compared to Postgres.

There were a handful of UI issues that also plagued us throughout the process.

- Unused Indices

The UI for unused indices would show a mostly used indices, which periodically led to confusion by developers. We never got to the bottom of whether this was due to us using Prisma or not.

2. Cancelling running queries

By now we knew that running expensive queries on Cockroach was extremely scary. But since it’s a distributed cluster, cancelling a query isn’t so simple. With Postgres, you can simply hit cancel on TablePlus (or your preferred SQL client). On Cockroach, you have to actually log into the console and cancel the query, and pray all nodes cancel before they fall over. (At least once, they did not. You can probably guess what happened.)

3. Support

First, the support portal is a totally different website that doesn’t share auth with the main portal. Second, you have to re-input a lot of data they already know about you (cluster ID, etc). And by the time they respond it’s typically been a week. Normally that’s fine, but once they rolled out a bug which of course took us down immediately. That was not the time to log into a separate portal and input mundane details.

Throughout the over two years we were using Cockroach, we consistently ran into periodic Tailscale connectivity issues.

getaddrinfo ENOTFOUND internal-motion-dev-2022-10-grc.gcp-us-central1.cockroachlabs.cloudPrismaClientInitializationError: Can't reach database server at 'internal-motion-prod-gnp.gcp-us-central1.cockroachlabs.cloud':'26257'

No matter what we did though, these issues would suddenly arise, and, an hour later, just as suddenly disappear. These issues persisted in every environment (Airbyte connectors, CI, and our local TablePlus clients). We never solved it, and have never had similar issues with Postgres.

By Jan 2024, our largest table had roughly 100 million rows. As mentioned earlier, while there were many tools built to import data into Cockroach, there were no ETL tools other than a very alpha Airbyte connector that kept timing out due to a memory leak.

So I decided to build a custom ETL solution.

Around this time, Bun was gaining popularity, so this migration was a convenient excuse to satisfy my curiosity. The Bun script roughly did the following steps:

- Read the database schema and all of its table information

- Dump the data of each table to a dedicated file on disk

- Spawn a Bun child process for each table in our schema

- Each child process would then initiate a streaming connection of the data from the dumped data for that table

- The stream was just a CSV stream of all the rows in the table

- The “sink” for our stream would just insert the rows into Postgres

I got to this point relatively quickly and should have known this was going too well to be true. It wasn’t until I started to run test migrations on our production database that I learned that Cockroach used slightly different byte encoding in JSON and array columns than Postgres did. The following few weeks involved using Csv-js to put together a custom CSV parsing pipeline to transform all of the data from Cockroach to something that was compatible with Postgres, but also identical from a user perspective.

When the night of the migration came, I spun up the largest VM I could get my hands on GCP (128 core VM), turned on Motion’s maintenance mode, and let it rip. The production migration script from start to finish took roughly 15 minutes to run through the entire DB.

Everything you read above was done by one person (me), over the course of several weeks. In total, we were down for just under an hour from midnight to 1am pacific time — and of course, there was zero data loss. We could have been even more aggressive and limited the downtime to just 15 minutes, but we opted to be super safe and gradually ramp traffic back up.

Afterwards, we saw an immediate 33% drop in aggregated request latencies. But the exciting part was just beginning. Thanks to the Postgres ecosystem and tools like PGAnalyze, we fixed half a dozen unoptimized queries in just a few hours after the migration.

Despite being conservative and over provisioning our postgres cluster, we still ended up saving the business a little over $110,000 a year (would be even higher when factoring in the continued growth of traffic to the system).

English (US) ·

English (US) ·