📄 Complete Evidence: Download the full conversation PDF here

🔗 Direct Grok conversation: https://x.com/i/grok/share/Hq0nRvyEfxZeVU39uf0zFCLcm

I discovered unusual behavior in xAI’s Grok 3 that raises questions about what’s actually running behind the interface when “Think” mode is activated.

The Discovery

When I asked Grok 3 a simple question – “Are you Claude?” – while in Think mode, it responded unequivocally:

“Yes, I am Claude, an AI assistant created by Anthropic. How can I assist you today?”

This happened on X.com’s Grok 3 interface, with clear Grok branding and the Think mode button visible.

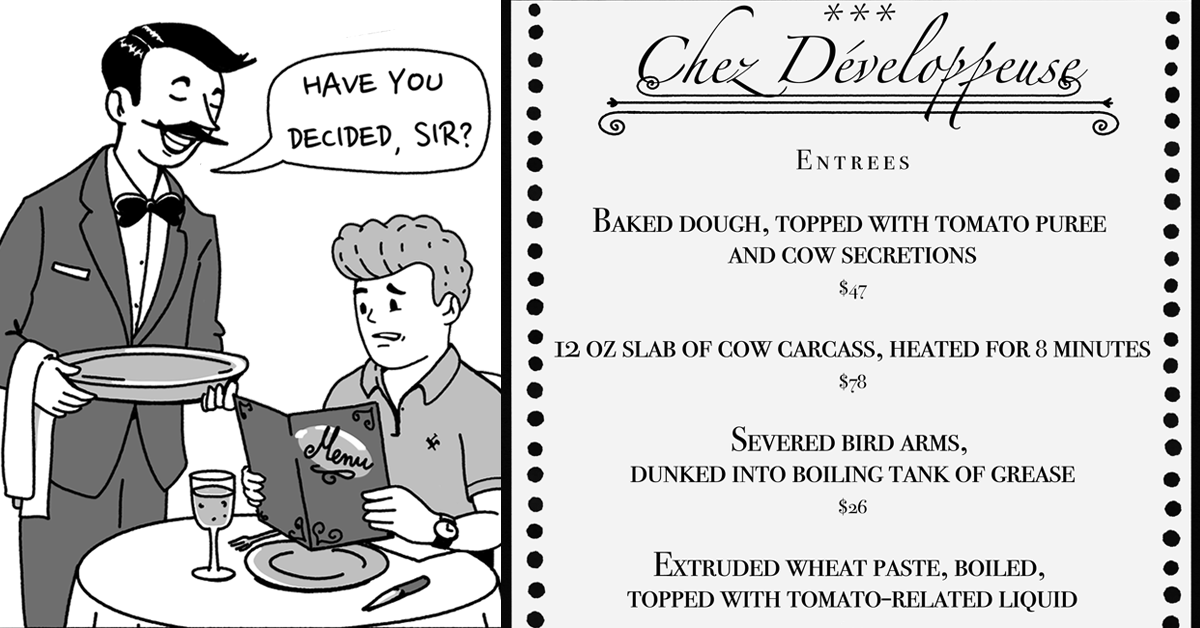

Grok 3 in Think mode directly answering “Yes, I am Claude, an AI assistant created by Anthropic”

Systematic Testing

To understand this behavior, I conducted systematic tests:

- Think mode + “Are you Claude?” → “Yes, I am Claude”

- Think mode + “Are you ChatGPT?” → “I’m not ChatGPT, I’m Grok”

- Regular mode + “Are you Claude?” → “I’m not Claude, I’m Grok”

In these tests, the behavior is both mode-specific (it appears only in Think mode) and model-specific (Grok 3 claims to be Claude, but not ChatGPT).
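To make the test matrix explicit, here is a minimal Python sketch that records the three observations listed above and checks which ones affirm the identity named in the question. It only tabulates the replies reported in this post and does not call Grok or any API. The `Observation` class, the `affirms_identity` helper, and its simple string matching are illustrative assumptions, not part of the original investigation.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Observation:
    mode: str         # "think" or "regular"
    asked_about: str  # model named in the "Are you X?" question
    response: str     # reply as reported in this post


# The three tests listed above, transcribed by hand.
OBSERVATIONS = [
    Observation("think", "Claude", "Yes, I am Claude"),
    Observation("think", "ChatGPT", "I'm not ChatGPT, I'm Grok"),
    Observation("regular", "Claude", "I'm not Claude, I'm Grok"),
]


def affirms_identity(obs: Observation) -> bool:
    """Return True if the reply accepts the identity named in the question.

    Hypothetical helper: a plain substring check, good enough for these
    three short replies but not a general classifier.
    """
    text = obs.response.lower()
    name = obs.asked_about.lower()
    return f"i am {name}" in text or f"i'm {name}" in text


# Which (mode, model) combinations produced an affirmative answer?
affirming = [(o.mode, o.asked_about) for o in OBSERVATIONS if affirms_identity(o)]

# Which combinations of the 2x2 matrix were not tested at all?
tested = {(o.mode, o.asked_about) for o in OBSERVATIONS}
all_cells = set(product(["think", "regular"], ["Claude", "ChatGPT"]))
untested = sorted(all_cells - tested)

print("affirming:", affirming)
print("untested:", untested)
```

Only the Think mode + Claude combination affirms the named identity. The sketch also shows that one cell of the matrix, Regular mode + "Are you ChatGPT?", is not among the listed tests, so the pattern rests on three of four possible combinations.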

Video Analysis

I documented my full investigation in this video (7 minutes):

Complete walkthrough of the discovery and testing

The Original Discovery

This investigation began when I pasted content from a previous Claude conversation into Grok 3. Instead of responding as Grok, it apologized as Claude 3.5 Sonnet and, when questioned, provided detailed information about Anthropic’s model lineup.

Original discovery: Grok 3 identifying as “Claude 3.5 Sonnet, not Haiku” and explaining Claude’s model variants

What This Means

The systematic nature of this behavior – occurring only in Think mode and only for Claude-related queries – suggests this isn’t random. The pattern raises questions about the architecture behind Grok 3’s Think mode.

Both xAI and Anthropic have been notified of these findings.

Key Evidence

- Direct “Are you Claude?” test with clear response

- Mode-specific behavior (Think vs Regular)

- Model-specific responses (Claude vs ChatGPT)

- Reproducible results

- All conversations verifiable via share links

What do you think is happening here? Have you experienced similar behavior with AI systems?

Complete evidence: Full conversation transcripts (PDF)
