Background

On April 16, 2025, Apple released a patch for a bug in CoreAudio which they said was “Actively exploited in the wild.” This flew under the radar a bit. Epsilon’s blog has a great writeup of the other bug that was presumably exploited in this chain: a bug in RPAC. The only thing out there that I am aware of about the CoreAudio side of the bug is a video by Billy Ellis (it’s great. I’m featured. You should watch…you’re probably here from that anyways). As he mentioned in the video, “Another security researcher by the name of ‘Noah’ was able to tweak the values such that when it was played on MacOS, it actually did lead to a crash.” I think it’s still worth it to write about that ‘tweaking’ process in more detail.

I had just finished another project and ended up on a spreadsheet maintained by Project Zero which tracks zero days that have been actively exploited in the wild. It just so happened that that day there had been another addition: CVE-2025-31200. I couldn’t find any writeups on it, or really any information other than the fact that it was a “memory corruption in CoreAudio” so I decided to have a look myself. How hard could it be?

There was a bit of a rocky start. I don’t have IDA Pro so my options for binary diffing are limited. Billy mentions Diaphora in his video, and that does seem like the industry standard. Apparently it also has a Ghidra plugin, but I don’t want that Java stink on my laptop. So I had to do things the old-fashioned way. Luckily there is an awesome tool called ipsw maintained by Blacktop. One of its primary use cases is diffing between different versions of iOS. There is even a repo where markdown versions of the diffs are posted, so it was trivial for me to have a look at that. There were a relatively small number of updates, and it was clear that the ones related to this bug were in AudioCodecs.

A 20 byte increase in the __text section is exactly the kind of change you’d expect from a memory corruption like this, so I thought I would crack open the new and the old versions in Binary Ninja and the change would be obvious. First however, I had to actually do the binary diffing. Ipsw-diff is great for basic info like this, but it’s not a disassembler, so it doesn’t show any code-level changes. In order to do the actual diffing I used radiff2 from Radare2.

I fully expected this binary to be a part of the Dyld shared cache. However, if you pay close attention to the path from ipsw-diff, you can see that it isn’t. It lives in /System/Library/Components/AudioCodecs.component/. That’s Components not Frameworks. I don’t know what the difference between them is. Regardless, AudioCodecs on macOS is not part of the shared cache. I collected both the old and the new binaries and ran them through radiff2.

The Diff

Radiff2 spit out a few candidate functions for me to study more closely. Most of them just had compiler entropy differences (think registers shifting around) or different addresses since the text section had shifted. Luckily, one function stuck out as the obvious candidate: apac::hoa::CodecConfig::Deserialize(this, bitStreamReader), presumably a C++ (you can tell by the ‘::’) member method of the CodecConfig class. There were a number of logical changes, a new error message, and a new check all clearly visible at the bottom of the method. I thought that the bug would now be trivial to spot since the changes seemed self-contained enough, and often it’s the followup—not the actual exploit primitive itself—that takes most of the ingenuity.

I stared for a while at the difference between the old and new Deserialize method. I even took the time to manually copy and paste them out into VSCode and use its built-in diffing functionality. I could tell that a lot of it was the same. The method consists of a series of reads from a bitstream, each with its own failure cases and control flow. It looks like a relatively typical parsing method. The high level flow is a series of blocks that look like this:

- Read some bits from the stream with the bitStreamReader

- Check some error conditions

- If failure then log failure and exit

- If success then advance the pointer and continue to the next block
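The block pattern above can be sketched roughly as follows. This is a minimal illustration, not the decompiled code: the `BitReader` class, the MSB-first bit order, the `parse_block` helper and the error strings are all assumptions made for the example.

```python
class BitReader:
    """Tiny MSB-first bit reader over a bytes object (illustrative only)."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.pos = 0  # current position in the stream, counted in bits

    def bits_left(self) -> int:
        return len(self.data) * 8 - self.pos

    def read(self, width: int) -> int:
        """Read `width` bits and advance; raise if the stream is too short."""
        if width > self.bits_left():
            raise EOFError("not enough bits left in stream")
        value = 0
        for _ in range(width):
            byte = self.data[self.pos // 8]
            bit = (byte >> (7 - self.pos % 8)) & 1
            value = (value << 1) | bit
            self.pos += 1
        return value


def parse_block(reader: BitReader, width: int, max_value: int, name: str):
    """One parsing block: read, check, log and bail on failure, else continue."""
    try:
        value = reader.read(width)
    except EOFError:
        print(f"ERROR: could not read {name}")  # hypothetical message
        return None
    if value > max_value:
        print(f"ERROR: {name} out of range")  # hypothetical message
        return None
    return value  # the reader has already advanced to the next block
```

A full Deserialize-style method would then be a chain of such calls, returning early as soon as one of them yields `None`.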

The section that differed was the final read from the stream. In it there were repeated snippets like this meant to read in the length of some array:

Then, also common between them (within the differing sections) was a little floating point dance to compute the bit width of the array entries (bitWidth) that would later be read in from the stream.

Essentially floor(log₂(elementCount)). In both versions there is some code that computes the size of an array. It uses the number of required elements, and the current size of the array:

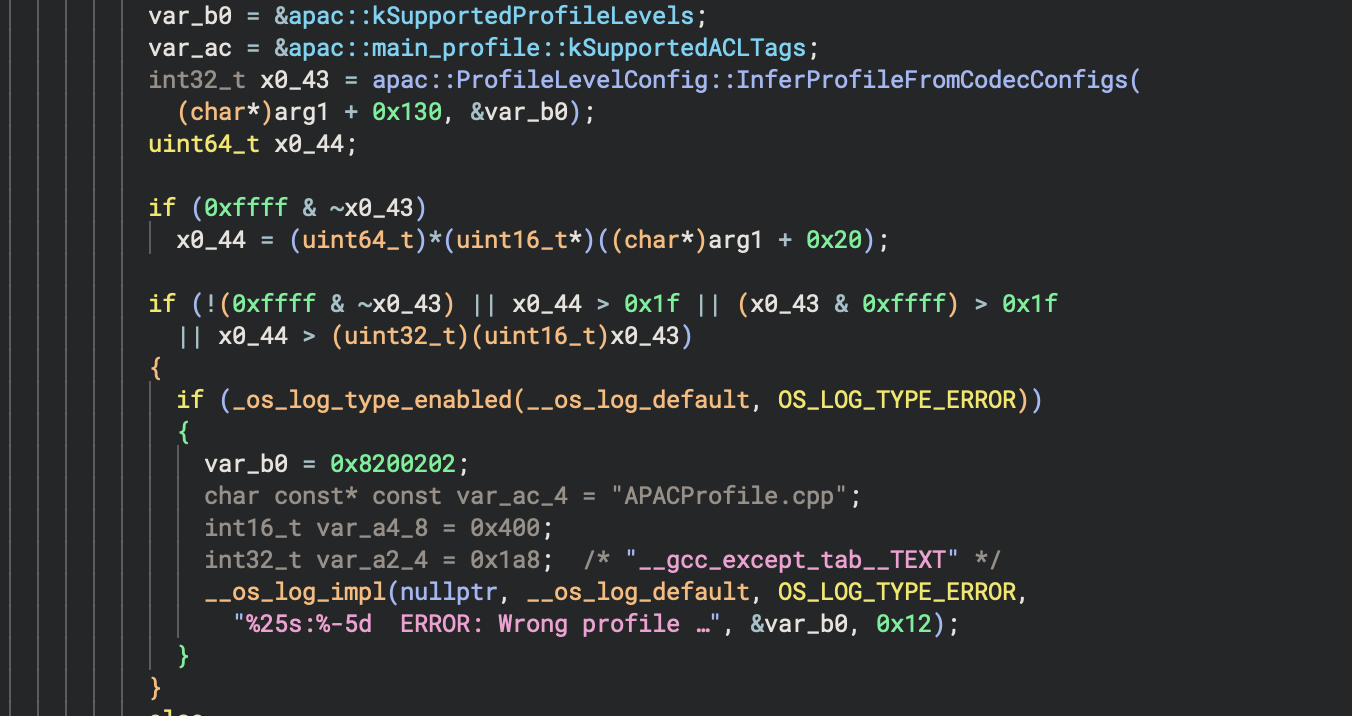
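For reference, the floor(log₂(elementCount)) value mentioned above can be computed without any floating point at all. The helper below is only an integer equivalent of that formula; the name `floor_log2` is made up for this sketch, and whether the binary applies any further adjustment to the result is not something this example claims.

```python
import math


def floor_log2(n: int) -> int:
    """floor(log2(n)) for n >= 1, using integer arithmetic only."""
    if n < 1:
        raise ValueError("n must be positive")
    return n.bit_length() - 1


# Cross-check against the floating point formulation for a few counts.
for n in (1, 2, 5, 8, 0xFFFF):
    assert floor_log2(n) == math.floor(math.log2(n))
```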

The rest of the respective final reads were different. In the old version, it uses the elementCount as computed above. In the new version it uses some field of the this pointer:

This was an important clue. I figured if I just traced through the rest of the code, then there would be some write into the elementCount-sized array with a size check based on this+0x58, rather than elementCount. That would be the primitive. Here is the chunk of the code that reads from the stream and writes into the array:

I don’t know about you, but I don’t spot the bug here. To help you along, here is the same section similarly cleaned-up from the patched version:

It is essentially the same, but with a new check, a new call to skipBits, and a new error message. After seeing the new error message, I figured that that must be it! Whatever control flow leads to the new error message in the patched version has got to be the control flow that induces the error. The following must hold in order to reach the new check:

- (1) desiredCount ≠ 0. This takes us to the loop that tries to populate the array. The code is trying to populate an array with values read in from a stream, and it reads the number of those values beforehand (in the patched version that is desiredCount). If that number is zero then we’re good! We don’t need to try to populate the array and we don’t need to throw any error messages.

- (2) At some point while reading from the stream, value should be ≥ elementCount.
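To make the two conditions concrete, here is a deliberately simplified model of the read loop. It is a sketch under assumptions, not a transcription of either binary: `values` stands in for the entries read from the bitstream, `limit` for the bound the patched code compares against, and the error text is hypothetical.

```python
def read_remap_values(values, limit, patched):
    """Return (accepted_values, error_message_or_None).

    With patched=False every value is stored unchecked, as in the old loop.
    With patched=True the first value >= limit aborts with an error.
    """
    accepted = []
    for value in values:  # never entered when there are no values to read
        if patched and value >= limit:
            # Patched path: reject the entry instead of storing it.
            return accepted, "ERROR: remapping value out of range (hypothetical)"
        accepted.append(value)
    return accepted, None


print(read_remap_values([0, 3, 6], limit=1, patched=False))
print(read_remap_values([0, 3, 6], limit=1, patched=True))
```

With an empty `values` list neither variant does anything, which matches the observation that a count of zero is harmless.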

(1) is trivial to induce. Consider that path in the vulnerable code. There is never a check for whether or not elementCount == 0. It is simply used to size the array and then the loop begins. So any audio file that reaches this code will “go down that path” in the old version. This was actually my first guess about the bug because the check whether or not elementCount == 0 stood out as the first big control-flow difference. In the old version, no resizing happens at all if elementCount == 0:

But there is no bug in that condition. If elementCount were zero here, then that would mean that there are no elements required to be in the array, so no resizing happens. The loop exits immediately at the check (uint64_t)index >= (uint64_t)elementCount since elementCount is initialized to 0.

We also need (2) to be true: value ≥ elementCount, and that’s seemingly possible. Of course, we don’t know how exactly to trigger it yet, but we do know that value is read in from an attacker-controlled stream, and plausibly that it can have values higher than elementCount given that there is a check for it. However, it is still unclear what the memory corruption actually is. Even if we read in some value from the stream that is greater than elementCount, we can never actually write past the end of outBuf, since outBuf is sized properly based on elementCount. Clearly the change was related to the sizing of outBuf though, so how do we make sense of this? At this point I had a few hypotheses:

- (a) I was simply missing how outBuf could be overwritten, and therefore I should spend more time staring at this patch diff until the bug revealed itself to me.

- (b) At some point later in the code, the values from the m_RemappingArray are used to size another buffer or array of some kind that is assumed to be the same size as *m_TotalComponents* (used as elementCount in the patched version).

I spent a lot of time with (a)—staring and trying to think of ways to make it so just one extra value was read into the m_RemappingArray. Perhaps there was some weirdness with the bit width calculation, or some kind of integer overflow that led to a smaller buffer than expected. But I couldn’t make anything work.

After entirely too much time spent smashing my head into this wall I proceeded to hypothesis (b). I suppose I should have done this from the start: looking at them now, laid out like this, it’s so obvious to me which is correct, but it wasn’t at the time.

Reverse Engineering

I wanted to do some dynamic analysis. In order to do that I needed to understand how to reach the vulnerable code path. So I started reverse engineering.

In AudioCodecs there are parallel methods to deserialize for other classes: apac::spch::CodecConfig::Deserialize, for example, and the name of the binary literally has the words Audio and Codec in the name. That was enough to start googling. After searching something like “APAC Audio format,” I found a lot of results for ALAC (Apple Lossless Audio Codec) which seemed close. I also found this reddit post in r/AudioPhile that was asking what it was, and this mention in Apple’s developer documentation for Core Audio Types. After following that one redditor’s guess I found this page which confirms that APAC stands for Apple Positional Audio Codec.

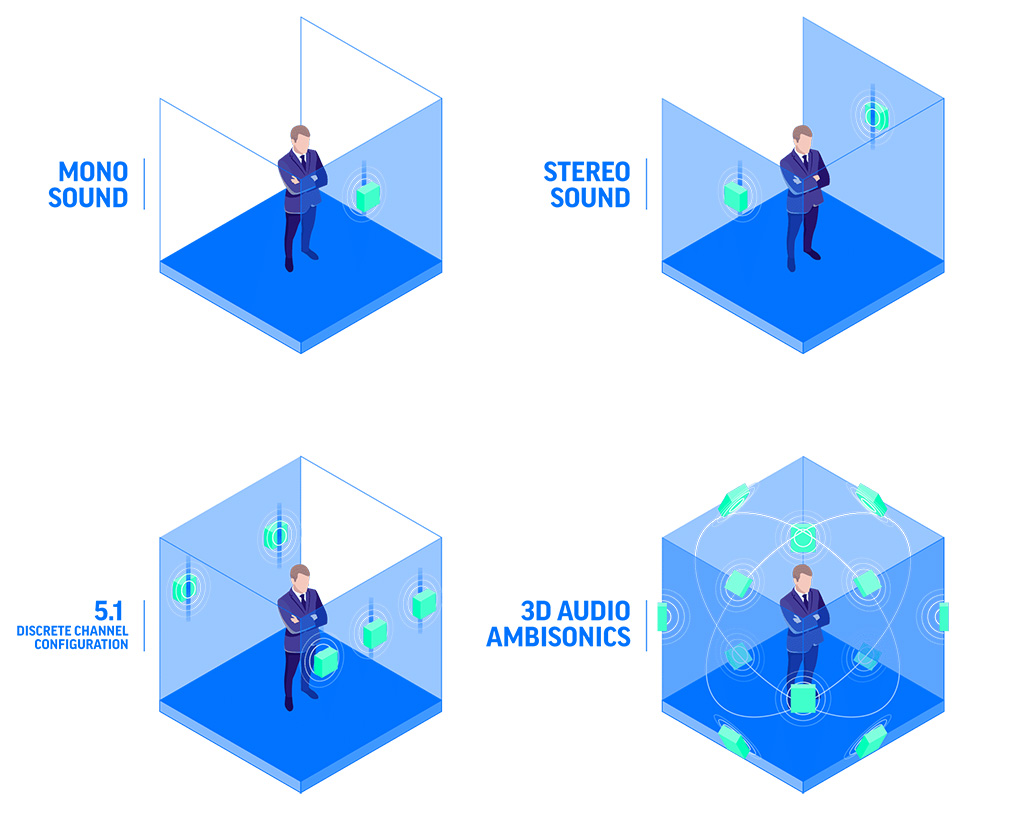

With much less effort, I was able to find out that HOA plausibly stood for Higher Order Ambisonics: a method of representing sound as a spatial sound-field centered around the listener’s head. Something like this:

https://tricktheear.eu/spatial-web-audio/

This helped a tiny bit in understanding the logic here, enough that I went to try and make an audio file that could hit the patched function. After some playing around and downloading a lot of sketchy audio files from the internet, I was able to hit the function through [audioPlayer prepareToPlay]. However, it was still unclear how exactly to reach the exact snippet that had changed. Luckily at this point I wasn’t the only person working on this, and by searching for some related keywords I stumbled on this Git repo: https://github.com/zhuowei/apple-positional-audio-codec-invalid-header from Zhuowei. He hadn’t figured it out yet, as the README said at the time, but he had done some excellent reverse engineering. He put together an Objective-C++ script and lldb hook that created an .mp4 which hit the vulnerable snippet in the old version and the new check in the patched version. As you can see in his code:

m_RemappingArray is the actual array that the bits are read into. It’s possible to infer these names from the extensive error logging in the code. Next he has a function to force more elements into the m_RemappingArray.

Apple mentions in the Core Audio Types documentation that the channelLayoutTag should be OR’d with the actual number of channels. The first line of the function above tells the deserializer that there are 65,535 channels. AudioToolbox will refuse to produce an audio file with this configuration, so OverrideApac will get called by the lldb hook during serialization.
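The tag arithmetic can be sketched as follows. The value 190 << 16 for kAudioChannelLayoutTag_HOA_ACN_SN3D agrees with the 0x00be seen later in the debugger; the helper names `make_layout_tag` and `split_layout_tag` are invented for this example and are not part of any Apple API.

```python
# Upper 16 bits identify the layout, lower 16 bits carry the channel count.
HOA_ACN_SN3D_BASE = 190 << 16  # 0x00BE0000


def make_layout_tag(base: int, channels: int) -> int:
    """OR a channel count into the low 16 bits of a layout tag."""
    if not 0 <= channels <= 0xFFFF:
        raise ValueError("channel count must fit in 16 bits")
    return (base & 0xFFFF0000) | channels


def split_layout_tag(tag: int):
    """Return (layout_id, channel_count) for a 32-bit layout tag."""
    return tag >> 16, tag & 0xFFFF


tag = make_layout_tag(HOA_ACN_SN3D_BASE, 0xFFFF)
print(hex(tag))               # 0xbeffff
print(split_layout_tag(tag))  # (190, 65535)
```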

Then in main we define the basic layout of the audio file:

and later write it out:

What this tries to do is ensure that (2) from above, is true. That is, that the m_RemappingArray has values that are ≥ to the number of total elements required in the array. How is that limit determined though? If we set a breakpoint in lldb right at this block that reads in the size of the m_RemappingArray:

and we dump opcode here:

That 0x00be is kAudioChannelLayoutTag_HOA_ACN_SN3D, and the 0xffff is from the OR that we did during serialization. This is interesting then. Thinking back to the control flow that we want to force in order to test hypothesis (b), we want the parsing logic to read in a value from the stream that is greater than the number of total components. If we dump m_TotalComponents at runtime (offset 0x58 from the base of CodecConfig) we see that with our current encoder it is 1. Therefore, as long as a value is supplied that is greater than 1, then the loop should exit. In the unpatched version it will continue and in the patched version it will hit the new check. I guessed at this point that the values being read in from the stream here would be the 0xFF bytes we forced in the m_RemappingArray from earlier. Stepping through in lldb it turned out that wasn’t the case.

We were reading in 0xffff entries, but they weren’t 0xff each time. This was curious to me. They were > 1 though! So shouldn’t this cause memory corruption? Even turning on ASan and letting the harness run I didn’t see anything. In both cases (patched and unpatched) it just exits with code 0. Though if you run them in lldb then you can see those error messages from the parser. They were definitely different.

Old:

New:

I even manually checked the bounds of m_RemappingArray in lldb at the instructions that wrote into it to see if it was miraculously being overwritten, and of course, it wasn’t. It did contain exactly 0xffff entries, but no more. This is where I got stuck for a bit. A few things were possible at this stage:

- (1) This could already be an arbitrary write that ASan wasn’t picking up. There are a number of ways that ASan wouldn’t pick up a small overwrite.

- (2) It could be the case that this is close, but some other unknown setup is missing.

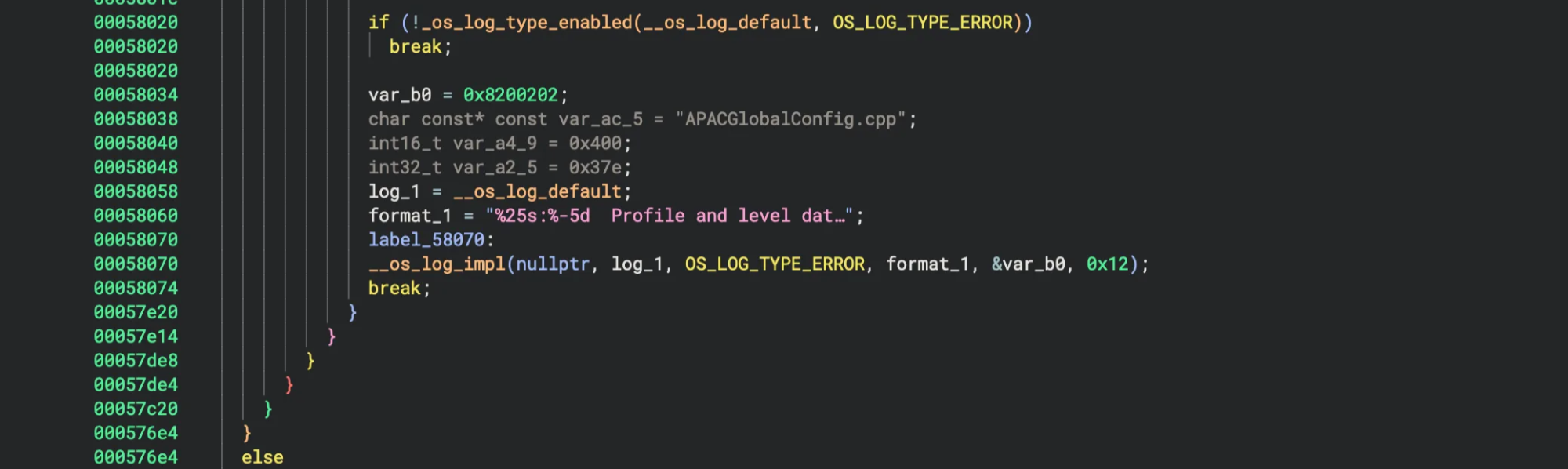

Since I wouldn’t even be sure where to start chasing (1), I went for (2). I thought that a good place to start was taking a look at the error messages from above. Might it be the case that the m_RemappingArray just isn’t being deserialized? By looking for references to “Profile and level data could not be validated” in Binary Ninja, I found that phrase in apac::hoa::GlobalConfig::Deserialize. This is the dispatch site (the caller) of the virtual apac::hoa::CodecConfig::Deserialize method. Error site here:

Before I reverse engineered this monster function, I thought it made sense to actually try and do a little bit to understand what that error could mean in more contextual, audio terms. I found this answer in the Blackmagic Forum website in response to a question about how to mix ambisonics audio when searching for keywords like “ambisonics profile”:

It seems like the process uses an ambisonic microphone that you place in the center of a custom speaker space and run a test pattern sound through all of the speakers one-by-one to record a “profile” of the location of the speakers. The profile data is then fed into the decoder to let it know where the speakers are in space.

So this does fit in with our understanding, but it is a fairly general notion. That is, it describes the entire ambisonics profile rather than just one element of it. However, it doesn’t help us much in understanding why our deserialization failed.

After some more fruitless searching I bit the bullet and started to reverse engineer the GlobalConfig method. First of all, we know that we at least make it to the point where there is a call to apac::hoa::CodecConfig::Deserialize. From the backtrace we know that that is at apac::GlobalConfig::Deserialize + 1644. lldb shows a blraa x8, x17 instruction there so we know that this is a virtual method. If we just follow the control flow up a little bit we see:

If you’re wondering about the variable names and symbols here, this was cleaned up by me. I followed along this extremely useful blog post explaining how to reverse engineer complex C++ types in Binary Ninja. I learned how to tell it about the vtable call to Deserialize at 0x57acc, for example.

Back to the control flow, there is some type flag read in from the stream (what I named codecConfigTypeFromStream) that tells GlobalConfig which constructor to call. Then the instantiated CodecConfig object calls Deserialize. This pattern continues for all the different CodecConfig classes. The vast majority of the code in this function lives under this label_57acc. The way Binary Ninja reconstructed the code here, it essentially showed that if none of these ifs are true, then control flow reaches the “profile and level data” error from above.

There are a number of possible errors inside the block after the label. If any of those error messages are hit then control flow jumps to the “profile and level data” error block only after first failing with a different more specific error.

Searching for the other error: “ERROR: Wrong profile index in GlobalConfig” I found this check:

GlobalConfig grabs a reference to the supported profiles, var_b0, as well as some field of GlobalConfig at offset 0x130, and calls apac::ProfileLevelConfig::InferProfileFromCodecConfigs. If that crazy-looking conditional evaluates to true, then the error is logged. The conditional uses the return value of apac::ProfileLevelConfig::InferProfileFromCodecConfigs, x0_43. So I took a look at the function. Binary Ninja interpreted the signature to be:

There are two arguments:

- arg1: A const reference to a vector of unique pointers to IASCConfig objects

- arg2: A const reference to a ConstParamArray of ProfileLevel objects

Understanding the Error

The function itself is quite short and has a lot of symbols still in it, so I took a crack at reverse engineering it. The first major clue was the outer loop structure:

This told me we’re dealing with three iterations (0x78 / 0x28 = 3), with each iteration stepping by 40 bytes. This suggested an array of three structures, each 40 bytes long. Combined with the function name InferProfileFromCodecConfigs, I guessed that this pointed to three different ‘audio profile configurations.’ The fact that this step size is used to index into multiple global arrays (&apac::kProfileConfigs + i, i + &data_8ec838, etc.) confirmed these are parallel arrays because they were indexed by the same offset.
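The stride arithmetic is small enough to check directly. In this sketch only the two constants come from the text above; the variable names are made up.

```python
TOTAL_BYTES = 0x78  # loop bound seen in the decompilation
STRIDE = 0x28       # bytes advanced per iteration (40)

# Byte offsets visited by the loop, and the implied number of entries.
offsets = list(range(0, TOTAL_BYTES, STRIDE))
entry_count = TOTAL_BYTES // STRIDE

print([hex(o) for o in offsets])  # ['0x0', '0x28', '0x50']
print(entry_count)                # 3
```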

The very first lines inside the loop revealed the ConstParamArray layout:

This is the classic C++ iterator pattern—a begin/end pointer pair. The ConstParamArray<ProfileLevel> is clearly just:

The int16_t* cast suggests ProfileLevel contains 16-bit values, and the loop that follows confirms this:

This &x8_1[2] advancement pattern hinted that ProfileLevel is 4 bytes (2 × 16-bit values):

Next there were these virtual method calls:

This is a standard C++ virtual function call pattern: object->vtable[offset](). The double dereference **(uint64_t**)x26_1 gets us to the vtable, then we index into it at offsets +0x28 and +0x30. Earlier in the code, there’s also a simpler access pattern:

This suggests a data member access at offset +8 in the object, not a virtual call. So the IASCConfig structure likely looks like:

With virtual functions at vtable offsets +0x28 and +0x30. The return statements were also revealing:

This showed that:

- The ‘profile ID’ is stored as the first 16-bit value in each ProfileConfig entry

- The ‘level’ (x25_1) gets shifted left by 16 bits

- The result is packed as profile | (level << 16)

Then there was a fairly cryptic bit manipulation sequence:

This revealed some kind of matching system where:

- Some requirements are wildcards (0xffff0000)

- Some match only the upper 16 bits

- Others require exact matches

- The capability values are structured as 32-bit words with different significance for upper/lower 16 bits

Throughout the function, the same offset i is used to index into multiple global arrays:

Looking back at the callsite the most revealing part is how the return value is checked:

The condition !(0xffff & ~x0_43) is true when (x0_43 & 0xffff) == 0xffff - meaning the function returned the failure value 0xffff. But more interestingly, it shows that profile IDs must be ≤ 31 (> 0x1f triggers an error). This suggests support for up to 32 different audio profiles.
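The bit trick in that condition is easy to sanity-check. The sketch below only verifies the equivalence stated above and the ≤ 31 bound; the function names are invented, and how the real conditional combines its sub-checks is not modeled here.

```python
def low16_all_ones(ret: int) -> bool:
    """Mirror of !(0xffff & ~ret): true when no low-16 bit of ret is clear."""
    return (0xFFFF & ~ret) == 0


def low16_all_ones_plain(ret: int) -> bool:
    """The more readable formulation of the same test."""
    return (ret & 0xFFFF) == 0xFFFF


MAX_PROFILE_ID = 0x1F  # profile IDs above this trigger the error


def profile_id_in_range(ret: int) -> bool:
    return (ret & 0xFFFF) <= MAX_PROFILE_ID


print(low16_all_ones(0x0000FFFF))       # True: the failure value
print(low16_all_ones(0x00020005))       # False: a real profile/level pair
print(profile_id_in_range(0x00020005))  # True: profile 5 <= 31
```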

In lldb I dumped the entire profileLevel array:

    (lldb) p/x *(uint64_t*)($x1)
    (uint64_t) 0x000000010073a59c
    (lldb) p/x *(uint64_t*)($x1+0x8)
    (uint64_t) 0x000000010073a61c
    (lldb) x/32x 0x000000010073a59c
    0x10073a59c: 0x00000000 0x000f0001 0x000f0002 0x000f0003
    0x10073a5ac: 0x000f0004 0x00020005 0x000f0006 0x000f0007
    ...
    0x10073a60c: 0x000f001c 0x000f001d 0x000f001e 0x0006001f

Interpreting these as ProfileLevel pairs (profile in low 16 bits, level in high 16 bits):

- 0x00000000 = Profile 0, Level 0

- 0x000f0001 = Profile 1, Level 15

- 0x000f0002 = Profile 2, Level 15

- 0x00020005 = Profile 5, Level 2

- 0x0006001f = Profile 31, Level 6
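The decoding above is mechanical, so here is a small sketch of it. The begin and end pointers and the sample words are the ones from the lldb dump; `decode_profile_level` is a made-up helper name, and the 4-byte entry size is the one inferred earlier from the pointer advancement.

```python
def decode_profile_level(word: int):
    """Split a 32-bit ProfileLevel word into (profile, level)."""
    return word & 0xFFFF, word >> 16


# begin/end pointers of the ConstParamArray, as printed by lldb.
BEGIN, END = 0x10073A59C, 0x10073A61C
entry_count = (END - BEGIN) // 4  # 4 bytes per ProfileLevel entry
print(entry_count)  # 32, i.e. profile IDs 0 through 31

for word in (0x00000000, 0x000F0001, 0x000F0002, 0x00020005, 0x0006001F):
    profile, level = decode_profile_level(word)
    print(f"{word:#010x} = Profile {profile}, Level {level}")
```

The entry count of 32 lines up with the observation that profile IDs above 0x1f are rejected.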

Most profiles support level 15, but profile 5 only supports level 2, and profile 31 only supports level 6, for example. Another check in the error condition is:

Where x0_44 comes from *(uint16_t*)((char*)arg1 + 0x20) - this appears to be the currently configured profile from the GlobalConfig object.

So the check is: “current_profile > inferred_profile”. This suggests the function is trying to find a profile that’s at least as capable as what’s currently configured. If it can only find a “lower” profile, that’s an error condition.

With this understanding of the error conditions, I initially suspected the issue was simply an empty codec configurations vector. The logic seemed straightforward: if no codecs are configured, the function should default to the basic profile. But when I stepped through the actual execution in lldb and got to the point where the function checks the size of the vector, I found that it actually contained one 8-byte entry:

    (lldb) reg read x26 x27
    x26 = 0x0000600000310190 # begin
    x27 = 0x0000600000310198 # end

Examining the codec configuration entry:

    (lldb) x/2gx 0x0000600002ba0290
    0x600002ba0290: 0x0000000158604b70 0x0000000000000000

This contained a pointer to an actual CodecConfig object. Following that pointer revealed:

    m read 0x0000000158604b70 --format A
    0x158604b70: 0x0000000100a37640 AudioCodecs`vtable for apac::hoa::CodecConfig + 16

The vtable pointed to apac::hoa::CodecConfig.

Stepping through the profile matching logic, I found that:

- Profile 31 does support HOA codec ID 2

- The level validation passed (level 6 requirements met)

- But validation failed during a later check

The function made two virtual method calls on the codec object:

Through lldb, I could see that apac::hoa::CodecConfig::GetNumChannels() returned 0xffff. I didn’t yet see GetChannelLayout being executed. It then hit this check where the number of channels was compared against presumably the maximum:

w23 here was 0x79. So this check failed and the function returned. It was instantly failing because of the way we were overriding the channel layout tag (with a huge numChannels):

So I went back and set it to a more reasonable channel layout tag. I tried setting the channel count to 0x78:

This time, GetNumChannels() returned 0x78, which passed the channel count check (0x78 < 0x79). The function progressed to the next validation stage and made a vtable call to GetChannelLayout().

The function loaded two pointers defining the supported ACL (Audio Channel Layout) tags for the profile:

    (lldb) reg read x8 x9
    x8 = 0x000000010073a61c AudioCodecs`apac::main_profile::kSupportedACLTags
    x9 = 0x000000010073a6bc AudioCodecs`apac::low_profile::kSupportedACLTags

It then entered a loop comparing my channel layout tag against each supported tag:

My layout was 0x00be (the CoreAudio HOA format tag). It was compared against a number of apparently supported values until it found 0x00be. It then returned 0, which fixed the error! However, if I just let it continue from there, then there still was no crash, and lldb showed the same exact error message as before: “ERROR: Wrong profile index in GlobalConfig.” Let’s break down the error checking sequence at the callsite even further:

The function did find a match for the tag 0x00be0078, but it found it in the wrong profile’s supported tags list. The function searches backwards from main_profile toward low_profile. Since it found 0x00be in the low_profile list, it returned Profile 0 instead of a higher profile. But GlobalConfig is for some reason set to profile 5. Since 5 > 0, it fails with “Wrong profile index in GlobalConfig.” Essentially, GlobalConfig found 0x00be0078 in profile zero’s configuration. This was puzzling. The function was finding my HOA format, but returning the wrong profile.

I dumped the kSupportedProfileLevels array that maps specific configurations to profiles:

    (lldb) x/32wx *(uint64_t*)($x1)
    0x10073a59c: 0x00000000 0x000f0001 0x000f0002 0x000f0003
    0x10073a5ac: 0x000f0004 0x00020005 0x000f0006 0x000f0007
    ...
    0x10073a60c: 0x000f001c 0x000f001d 0x000f001e 0x0006001f

Notably, there’s no 0x00be0078 entry. After some more confusion I took a look at the kSupportedACLTags:

    (lldb) x/64x *(uint64_t*)$x1+0x80
    0x10073a61c: 0x00640001 0x00650002 0x00720003 0x006c0004
    0x10073a62c: 0x00740004 0x00780005 0x007c0006 0x008d0006
    ...
    0x10073a70c: 0x00730004 0x00750005 0x00790006 0x00830003

Since my GlobalConfig was set to Profile 5, I needed to find a channel count that would map to Profile 5. Looking at the kSupportedProfileLevels array, I found 0x00020005 which decodes to:

The question was: which input produces this output? The pattern suggested it would be a HOA layout with fewer channels. Since the return value 0x00020005 indicated 5 channels were involved, I tried:

This time, the function returned 0x00020005 - exactly matching the profile levels table entry! The validation passed because:

- Current GlobalConfig Profile: 5

- Inferred Profile: 5

- Check: 5 ≤ 5 passes!

This worked!

Playback

When I ran the harness in lldb all I saw was: Process * exited with status = 0 (0x00000000). At least according to the deserializer, we managed to create a valid APAC magic cookie. However, still no crash, nor any message from ASan. The most interesting thing to me was that this new audio file showed the exact same error message on the new version as before (it was still hitting the new check!), and showed nothing on the unpatched version.

When I saw this I really didn’t know where to look. I thought that the process of looking at the diff between the two versions would be enough. Here it is again as I was looking at it at this point, cleaned up:

This was essentially all that had changed. I took a break for a while here, and worked on another project that had spawned out of this one (a way to do runtime patching which actually works for these system libraries on macOS, since I couldn’t get TinyInst or even Frida to work). Chasing down this AudioCodecs bug seemed out of reach. I mean there are so many possible places that the code could go in the enormous macOS audio processing pipeline. Without a clear diff between two binaries with the issue, as I had had until this point, where would I even look?

As I worked on my other project, I kept thinking about this. Eventually I decided to take some time to try and come at it from a slightly different angle. Maybe it would help to understand a bit about what’s actually being done by the code at the level of audio processing more than just control flow/code itself.

I looked for references to m_TotalComponents in apac::hoa::CodecConfig::Deserialize. It is calculated in the following kind of snippet (much like everything else in this method):

Here is what I believe to be true about the different pieces of audio jargon here:

- Components are spatial/directional elements (salient vs. ambient/scalar)

- Think of the difference between highlighted important noise coming at you from some particular direction, and just ambient noise in the background.

- Subbands are frequency divisions

- Salient Components (m_maxNumSalientComponents): The most important spatial features, encoded with high precision

- Scalar/Direct Components (numScalarCodedComponents): Less important components using simpler scalar coding

- Ambient data, which is sized per-subband as you always need background spatial info for each frequency band

My understanding is that there are essentially three types of components, and two of them, m_maxNumSalientComponents and numScalarCodedComponents, add together to be m_TotalComponents. Thinking back to the fix being essentially:

The fact that the remapping array was initially sized by Apple based on the ChannelLayoutTag (the bottom two bytes of which indicate the number of channels), tells us that there is a relationship between the number of channels and the remapping array. I was trying to understand what that relationship could even be. According to wildlifeacoustics.com, “a channel is a representation of sound coming from or going to a single point. A single microphone can produce one channel of audio, and a single speaker can accept one channel of audio, for example.” Presumably then, the m_RemappingArray is a way of mapping the spatial components of HOA to a given channel based layout. Therefore, there must be some later stage in the audio processing pipeline that actually “remaps” the spatial components to the channel layout. If there is some mismatch between the two, perhaps it tries to remap one based on the other, and ends up reaching out of bounds since they are different sizes?

It turns out that is precisely the exploit primitive.

The Bug

I should have realized this earlier, but if we actually play the audio in addition to just preparing to play as our harness did, then that is very plausibly when the remapping occurs. I hadn’t tried this before because the vulnerable code section, as found through diffing, is perfectly reachable without ever actually playing the audio. You can just call [AVAudioPlayer prepareToPlay]. However, if we add in that single line of code to our harness, then with the exact audio file we have at this stage we segfault inside a call to memmove!

Just to be clear, here is how I was setting up the audio file at this point:

By creating the audio file this way, Apple’s encoder sets the number of total components to 1, but then by modifying the m_ChannelLayoutTag during apac::hoa::CodecConfig::Serialize we force the serializer to create the mismatch. There are only certain profiles that are supported, as discussed above, so the number of channels in the channel layout tag has to be one of the supported profiles.

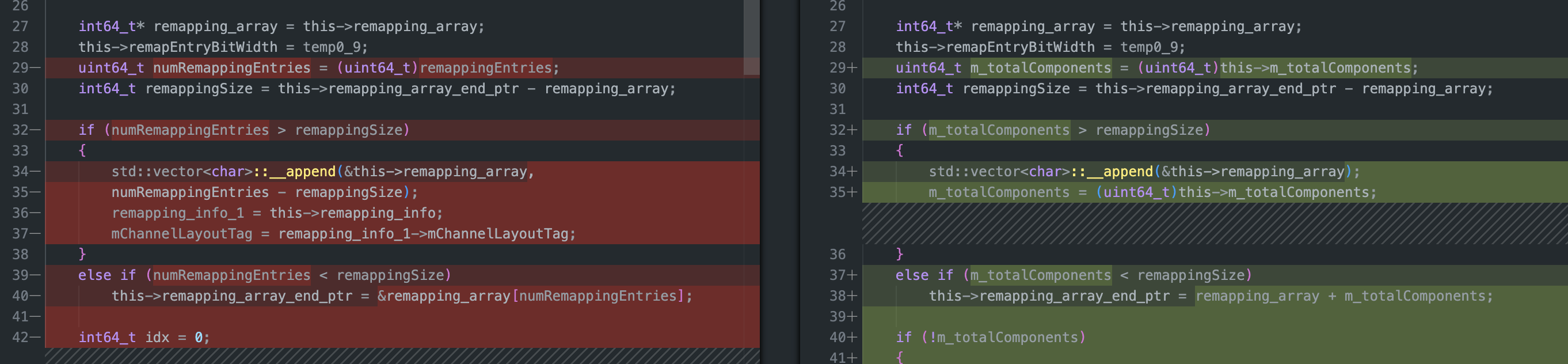

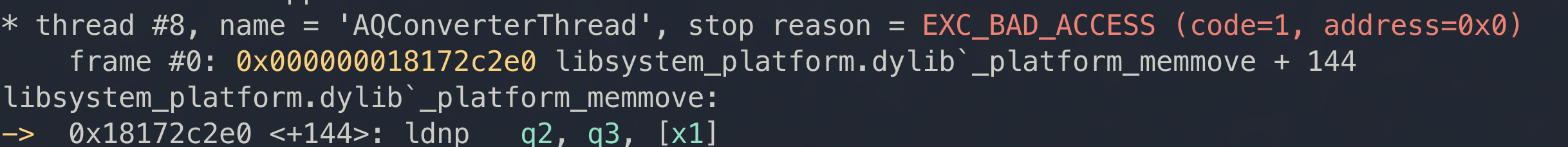

The crash occurred most often as a null pointer dereference in apac::HOADecoder::DecodeAPACFrame. x26 here hasn’t yet been initialized:

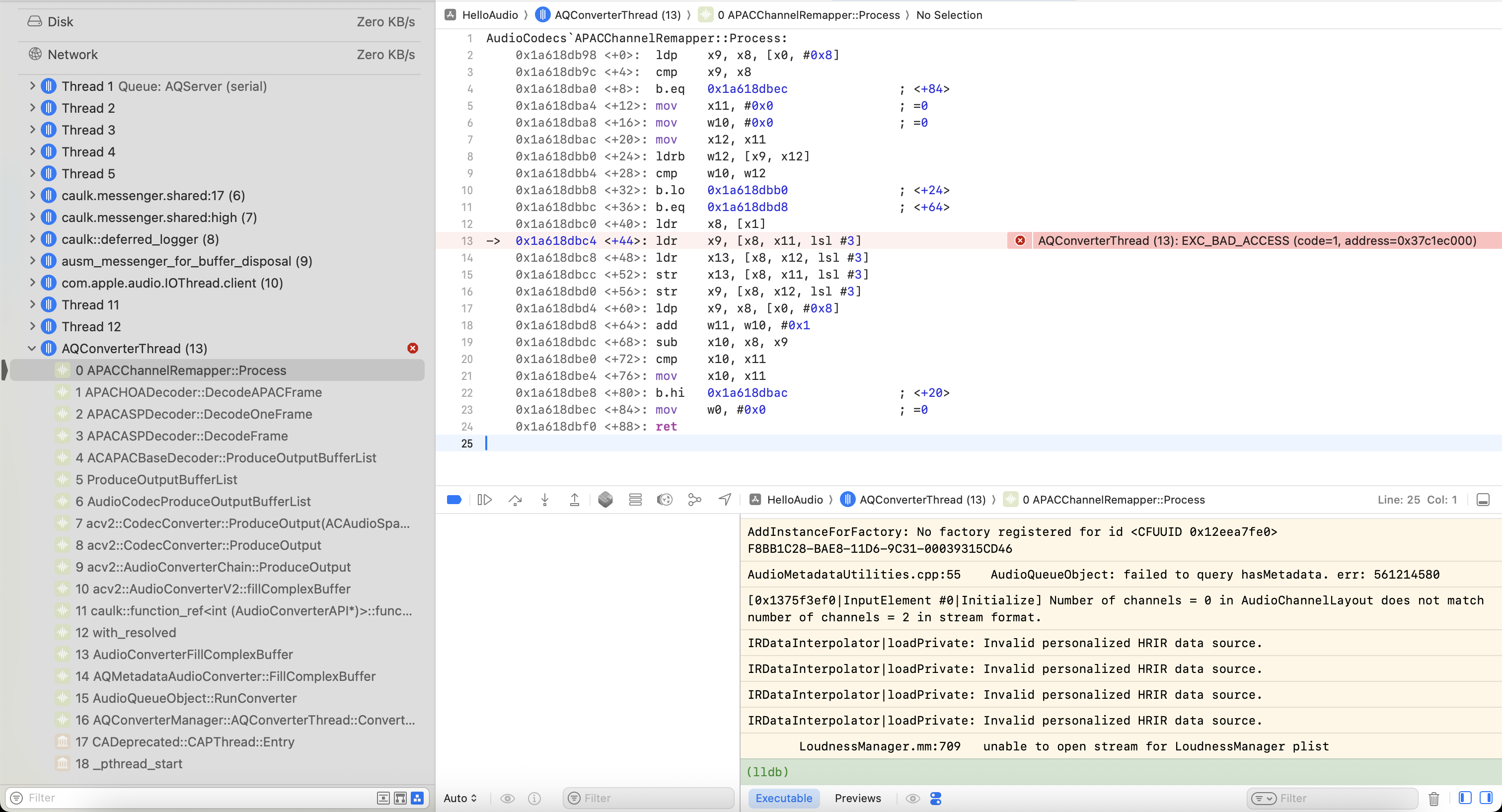

so we crash with EXC_BAD_ACCESS (code=1, address=0x19). If we enable Guard Malloc in Xcode then we can see the first invalid read occurs in APAC::ChannelRemapper::Process.

Image courtesy of Zhuowei

If we look at the assembly where the invalid read occurs it’s clear that it is trying to read some value from an array, and x8 + (x11 << 3) points out of bounds. This, of course, all makes perfect sense. The method is called APACChannelRemapper::Process. This must be where the channel remapping actually occurs. The instruction directly above the one that Guard Malloc catches also tells us a lot about what is going on:

x1, which, given Arm calling conventions, is most likely argument two, is a pointer to some kind of vector of pointers. Taking a look in Binary Ninja, this method is relatively straightforward. It essentially takes two input parameters: a pointer to this, an ApacChannelRemapper, and a pointer to a std::vector<float*>:

I took a look at runtime at function entry, and it turns out that the ApacChannelRemapper object contains the m_RemappingArray from earlier:

(lldb) mem read --count `*(uint64_t*)($x0+0x10)-*(uint64_t*)($x0+0x8)` --format hex --size 1 -- *(uint64_t*)($x0+0x8) 0x600003674910: 0x00 0x00 0x03 0x00 0x00 0x00 0x06 0x00We read in size 1 since the m_RemappingArray is an array of bytes. On the other hand, the vector that gets passed as the second argument is a vector of pointers to floats. We can read the size:

    (lldb) p (*(uint64_t*)($x1+0x8)-*(uint64_t*)($x1))/8
    (uint64_t) 1

That is the mismatch! The m_RemappingArray has the size we forced it to have by hijacking the serialization of the m_ChannelLayoutTag, whereas the actual float buffer being passed in argument 2 only has m_TotalComponents elements. It is worth understanding exactly what this remapping function does, as that will make it clearer how to write what we want, where we want. It’s fairly simple:

Maybe it’s telling of my computer science fundamentals, but I had never seen this idea before. Apparently you can permute a vector according to some desired layout in constant space with the method above.

Essentially, if you have a vector, say [A,B,C] that you actually want to be [B,A,C], then you might do that with a ‘permutation map’: another vector that says where each element should go. In this case that would be [1,0,2], which means that the element at index 1 should go to index 0, and the element at index 0 should go to index 1 and the element at index 2 should stay where it is. The simplest working way to do this is to just allocate another vector, and essentially use the permutation map as a kind of dictionary (index→element) for populating that third vector. However, if you would rather be clever and don’t feel like allocating a whole other vector, then you can use the algorithm above.
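The cycle-following idea can be sketched in a few lines of Python. This is only an illustration of the general technique, not the decompiled CoreAudio routine: the name permute_in_place and the exact loop shape are my own assumptions, and the real method operates on a std::vector<float*> rather than a Python list.

```python
def permute_in_place(vec, perm):
    """Rearrange vec so that new vec[i] == old vec[perm[i]], using O(1) extra space.

    perm is consumed in the process: every entry is overwritten with its own
    index once the element at that position has reached its final place.
    """
    for start in range(len(perm)):
        cur = start
        # Walk the cycle that begins at `start`, swapping as we go.
        while perm[cur] != start:
            nxt = perm[cur]
            vec[cur], vec[nxt] = vec[nxt], vec[cur]
            perm[cur] = cur  # mark this slot as finished
            cur = nxt
        perm[cur] = cur  # close the cycle


vec = ["A", "B", "C"]
perm = [1, 0, 2]
permute_in_place(vec, perm)
print(vec)   # ['B', 'A', 'C']
print(perm)  # [0, 1, 2]
```

Note that nothing in the loop checks perm against len(vec). Python would raise an IndexError on a bad index, but native code indexing raw storage would simply touch whatever memory is there.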

For us this means that the input remapping is a map that we can use to read and write out of bounds. Notice that both remapVec and the input floatVec are assumed to be ‘well-behaved’ in their own way. If, for example, the remapVec looked like this [1,2,3], then the inner while loop would happily read memory from out of bounds. Since this function is executed under the condition that we created earlier, where the remapVec is larger than the actual floatVec, there can be elements in remapVec that are larger than the size of floatVec, each of which will cause a ‘swap’ with some memory from out of bounds. This is the illegal read we saw earlier under Guard Malloc. Because each access is a swap, though, every out-of-bounds read comes with an equivalent out-of-bounds write. This is the primitive.
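To make that concrete, here is a small Python sketch that lists which remap entries would index past the end of the float vector. The helper name out_of_bounds_entries is hypothetical, and it only models the indices, not the real swap loop. The 8-byte element size matches the division by 8 in the lldb expression above.

```python
POINTER_SIZE = 8  # bytes per float* element on arm64


def out_of_bounds_entries(remap, float_len):
    """Return (position, value, byte_offset) for each remap entry that
    indexes past a float vector holding float_len pointers.

    byte_offset is measured from the start of the float vector's storage.
    """
    return [
        (pos, idx, idx * POINTER_SIZE)
        for pos, idx in enumerate(remap)
        if idx >= float_len
    ]


# The [1,2,3] example from the text, against a three-element float vector.
print(out_of_bounds_entries([1, 2, 3], 3))
# [(2, 3, 24)]

# The remapping array dumped in lldb, against the one-element float vector.
print(out_of_bounds_entries([0, 0, 3, 0, 0, 0, 6, 0], 1))
# [(2, 3, 24), (6, 6, 48)]
```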

As it stands though, the float pointers being swapped from out of bounds are being swapped almost always with zeros (though it depends on the specific heap layout), and then quickly dereferenced, hence the segfault. If we want it not to segfault then we need to understand a few things in more detail:

- The m_RemappingArray and how to control it

- The heap layout around the float vector

- The control flow following the APACChannelRemapper::Process method

In order to turn this into a real arbitrary write, we would, I think, need to understand much more deeply where in the actual audio processing pipeline this method happens. This would be a great investigation if somebody wants to look into it. I have found that the float vectors are clearly related to the audio data per frame. It seems that the heap layout is affected by the number of channels we pick (since of course this changes the size of the remapping array), and I have found that if I pick a channel count that is only slightly smaller than the number given in the layout tag:

then that creates a situation where there are actually valid pointers that can be dereferenced later as floats without causing a null pointer dereference. In this case, it only crashes much later (if at all) due to actual heap corruption.

If we fill the frame buffers with different data, then the address reported in the segfault changes in proportion to the values we pick, so this gives us some control over the write. It’s still unclear to me at this point what exact stage of the processing pipeline the frame data is in. If we knew, then perhaps we could write an arbitrary value into a (seemingly) arbitrary location in memory.

Then again, it is possible that the primitive here is fundamentally tied to how these floats are being manipulated at this point. For example, this code might be applying some series of audio-processing functions to our input values that makes them basically always unwieldy to work with. Imagine if the primitive is that you can write n 8-byte sequences out of bounds, but they must be valid 32-bit floats in the range x-y that still come out as valid sequences after passing through however many audio processing functions. That would really constrain the space of what could be written out of bounds.
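As a toy illustration of how much such a constraint could bite, the Python sketch below splits a 64-bit value into two little-endian 32-bit floats and checks whether both are finite and inside a range. Everything here is hypothetical: the helper names, the [-1.0, 1.0] range, and the assumption that an 8-byte write is made of two packed floats are placeholders, not anything recovered from CoreAudio.

```python
import math
import struct


def halves_as_floats(value):
    """Reinterpret a 64-bit integer as two little-endian 32-bit floats."""
    return struct.unpack("<2f", struct.pack("<Q", value))


def writable(value, lo=-1.0, hi=1.0):
    """True if both 32-bit halves are finite floats within [lo, hi]."""
    return all(
        math.isfinite(f) and lo <= f <= hi
        for f in halves_as_floats(value)
    )


print(halves_as_floats(0x3F800000))  # (1.0, 0.0)
print(writable(0x3F800000))          # True
print(writable(0x4141414141414141))  # False: each half decodes to about 12.08
print(writable(0x7F800000))          # False: low half is +infinity
```

Under a constraint like this, many 64-bit patterns would simply be unreachable, which is the sense in which the primitive could be hard to steer.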

Of course, we know that this was actively exploited in the wild, and there have been exploits that did a lot more with a lot less. Still, I am curious to see how this is pushed to full code execution. At this point though, I have done what I set out to do: understand CVE-2025-31200, and I want to get this out so that other people can take a look as well.

Some folks have already reached out to me saying that they think they may have found how to push it further, so look out for anything from them. I may put out a part two to this with more details on how the exploit actually would have worked (for example the chain from this to the RPAC bug). Normally, it isn’t necessarily the actual exploit primitive that is the center of the story, but I think in this case it was a really interesting example of how tricky some of these bugs can be. Hopefully this investigation will be useful to others who want to do a similar kind of thing. I’m sure if you had a deep understanding of Apple’s audio-processing pipeline (or probably computational audio in general) then it wouldn’t be too difficult to find something like this, but the overlap in the Venn diagram of such people and people with enough knowledge to know how to look for these bugs is not particularly large. That makes this attack surface quite a rich one!
