Rust is fast at runtime — but not so much at compile time. That’s hardly news to anyone who's worked on a serious Rust codebase. There's a whole genre of blog posts dedicated to shaving seconds off cargo build.

At Feldera, we let users write SQL to define tables and views. Under the hood, we compile that SQL into Rust code — which is then compiled with rustc to a single binary that incrementally maintains all views as new data streams into tables.

We’ve pulled plenty of tricks in the past to speed up compilation: type erasure, aggressive code deduplication, limiting the number of generated lines. That got us quite far. Recently, however, we started onboarding a large enterprise customer with fairly complicated SQL, who wrote many very large Feldera programs. One of them is 8,562 lines of SQL, which the Feldera SQL-to-Rust compiler translates into ~100k lines of Rust.

To be clear, this isn’t some massive monolith we’re compiling. We’re talking about ~100k lines of generated Rust. That’s peanuts compared to something like the Linux kernel — 40 million lines (which manages to compile in a few minutes).

And yet… this one program was taking around 25 minutes to compile on my machine. Worse, on our customer's setup it took about 45 minutes. And this was after we had already switched the generated code to dynamic dispatch and eliminated nearly all monomorphization.

Here's the log from the Feldera manager:

Almost all the time is spent compiling Rust; the SQL-to-Rust translation itself takes only about 1m40s. Even worse, the Rust build is the equivalent of a cargo release build, which doesn't use incremental compilation by default, so the program is rebuilt from scratch every time. (Only the crate dependencies are cached and reused, and the times given here assume they are already built.) Even the tiniest change in the input SQL kicks off a full rebuild of that giant program.

Of course, we tried debug builds too. Those cut the time down to ~5 minutes, but they’re not usable in practice. Once the SQL type-checks, our customers already know the Rust code will compile; what they care about is actual runtime performance. They're running real-time data pipelines and want to see end-to-end latency and throughput, and debug builds are far too slow (and misleading) for that.

What's happening?

Here’s the frustrating part.

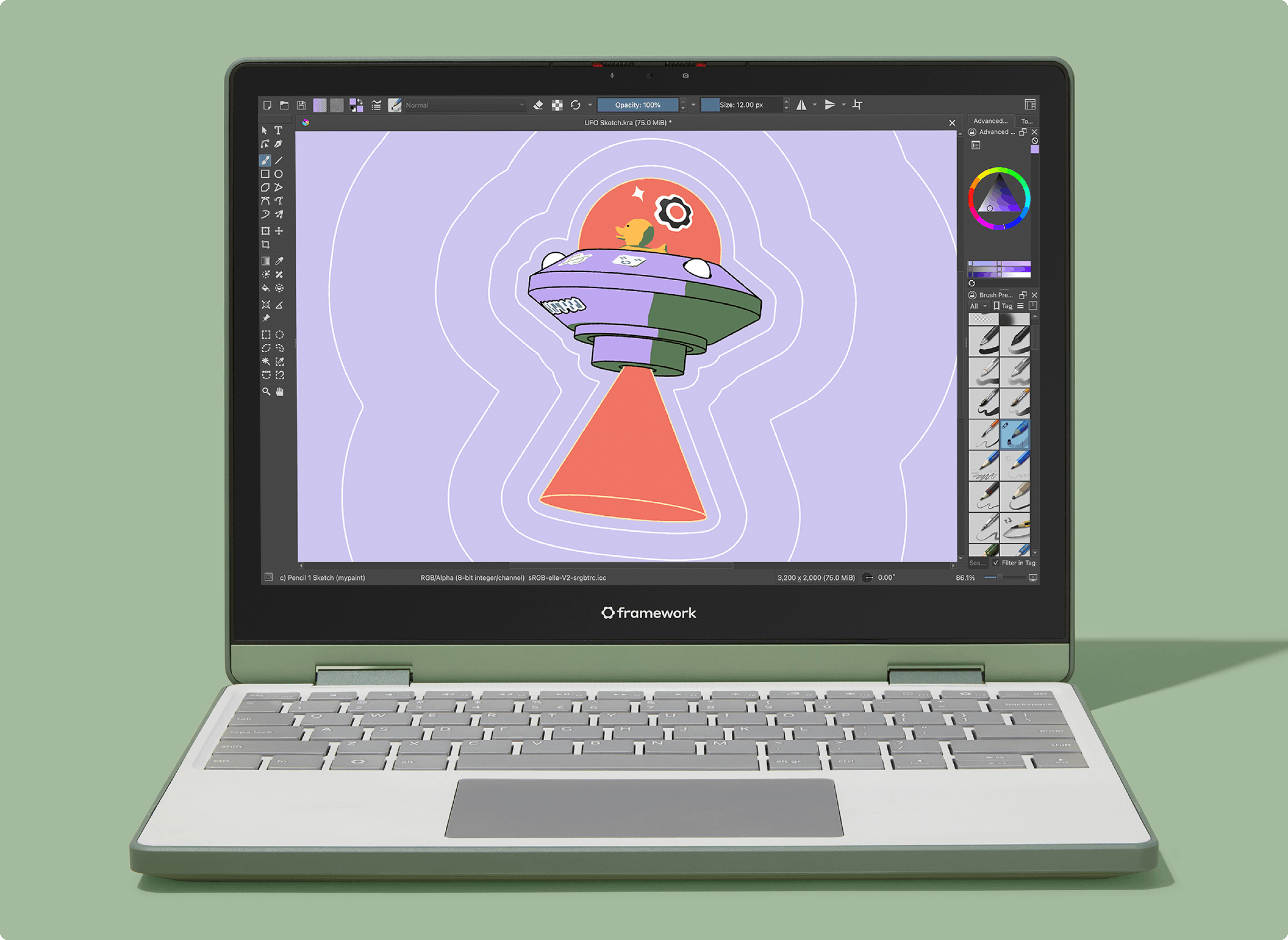

We're using rustc v1.83, and despite having a 64-core machine with 128 threads, Rust barely puts any of them to work. A quick look at htop during compilation makes this obvious:

That’s right. One core at 100%, and the rest are asleep.

We can instrument the compilation of this crate by passing -Ztime-passes in RUSTFLAGS (this requires a nightly toolchain). The output shows that the majority of the time is spent in LLVM passes and codegen, which unfortunately are single-threaded:

At times during the build, rustc spins up a few threads (maybe 3 or 4), but it never fully utilizes the machine. Not even close.

I get it: parallelizing compilation is hard. But this isn’t some edge case; looking at the program ourselves, we saw plenty of opportunities to parallelize its compilation.

A side note: you might wonder whether increasing codegen-units in Cargo.toml would speed up these passes. In our experience, it didn't matter. The times reported here use the default of 16, but we also tried values like 256 with the default LTO configuration (thin local LTO). That was somewhat confusing to us (as non-rustc experts), and I'd love to read an explanation for it.

What can we do about it?

Instead of emitting one giant crate containing everything, we tweaked our SQL-to-Rust compiler to split the output into many smaller crates. Each one encapsulates just a portion of the logic and depends on the crates it needs, with a single top-level main crate pulling them all in.
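To see why splitting helps, it can be useful to treat the crates as a dependency graph: as soon as all of a crate's dependencies are built, it can compile alongside every other crate in the same position. The sketch below groups crates into such "waves" with a level-by-level topological sort (Kahn's algorithm). It is a simplified model for illustration, not how cargo actually schedules jobs; the `build_waves` function and the crate names are hypothetical, and every crate (including those without dependencies) is assumed to appear as a key in the map.

```rust
use std::collections::HashMap;

/// Group crates into "waves": every crate in a wave depends only on crates in
/// earlier waves, so all crates in one wave could be compiled concurrently.
/// Level-by-level Kahn's algorithm; crates caught in a cycle are never emitted.
fn build_waves(deps: &HashMap<&str, Vec<&str>>) -> Vec<Vec<String>> {
    // Number of dependencies of each crate that are not built yet.
    let mut pending: HashMap<&str, usize> =
        deps.iter().map(|(&krate, ds)| (krate, ds.len())).collect();
    // Reverse edges: dependency -> crates that depend on it.
    let mut dependents: HashMap<&str, Vec<&str>> = HashMap::new();
    for (&krate, ds) in deps {
        for &d in ds {
            dependents.entry(d).or_default().push(krate);
        }
    }

    let mut waves: Vec<Vec<String>> = Vec::new();
    // Crates with no dependencies can start immediately.
    let mut ready: Vec<&str> = pending
        .iter()
        .filter(|&(_, &n)| n == 0)
        .map(|(&krate, _)| krate)
        .collect();
    while !ready.is_empty() {
        ready.sort(); // deterministic order within a wave
        let mut next = Vec::new();
        for &krate in &ready {
            if let Some(users) = dependents.get(krate) {
                for &user in users {
                    let n = pending.get_mut(user).expect("every crate is a key in deps");
                    *n -= 1;
                    if *n == 0 {
                        next.push(user); // all of its dependencies are now built
                    }
                }
            }
        }
        waves.push(ready.iter().map(|k| k.to_string()).collect());
        ready = next;
    }
    waves
}

fn main() {
    // Hypothetical crate graph: two independent scans feed a join, and the
    // top-level main crate pulls everything in.
    let mut deps: HashMap<&str, Vec<&str>> = HashMap::new();
    deps.insert("op_scan_a", vec![]);
    deps.insert("op_scan_b", vec![]);
    deps.insert("op_join", vec!["op_scan_a", "op_scan_b"]);
    deps.insert("main", vec!["op_scan_a", "op_scan_b", "op_join"]);
    for (i, wave) in build_waves(&deps).iter().enumerate() {
        println!("wave {i}: {wave:?}");
    }
}
```

In this toy graph the two scan crates land in the same wave and can build in parallel. A single monolithic crate, by contrast, is one indivisible job from cargo's point of view; the wider the waves, the more cores there is work for.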

The results were spectacular. Here's the same htop view during compilation after the change:

Beautiful. All the CPUs are now fully utilized all the time.

And it shows: the time to compile the Rust program is down to 2m10s!

How did we fix it?

In most Rust projects, splitting logic across dozens (or hundreds) of crates is impractical at best, a nightmare at worst. But in our case, it was surprisingly straightforward — thanks to how Feldera works under the hood.

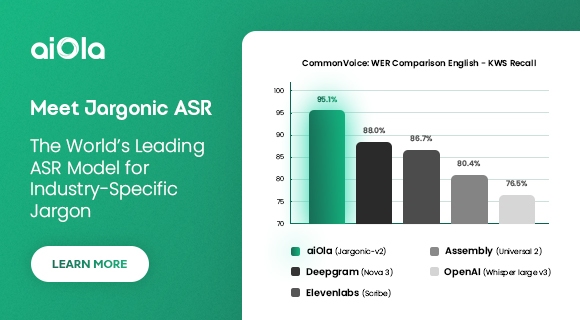

When a user writes SQL in Feldera, we translate it into a dataflow graph: nodes are operators that transform data, and edges represent how data flows between them. Here's a small fragment of such a graph:

Since the Rust code is entirely auto-generated from this structure, we had total control over how to split it up.

Each operator becomes its own crate. Each crate exports a single function that builds one specific piece of the dataflow. They all follow the same predictable shape. The top-level main crate just wires them together.
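A minimal sketch of that shape, assuming a hypothetical `Operator` type and a single exported function that we call `build` here (the real generated code and its signatures aren't shown in this post). It emits each operator crate's Cargo.toml, with path dependencies on the crates of its upstream operators, and the top-level main.rs that calls every crate's `build` function in dependency order. The operator bodies themselves are left out.

```rust
/// Hypothetical, simplified dataflow node: the crate generated for it and
/// the indices of the operators that feed it.
struct Operator {
    crate_name: String,
    inputs: Vec<usize>,
}

/// Emit the Cargo.toml of one operator crate. It declares a path dependency
/// on the crate of each upstream operator.
fn operator_manifest(ops: &[Operator], idx: usize) -> String {
    let op = &ops[idx];
    let mut toml = format!(
        "[package]\nname = \"{}\"\nversion = \"0.1.0\"\nedition = \"2021\"\n\n[dependencies]\n",
        op.crate_name
    );
    for &i in &op.inputs {
        let dep = &ops[i].crate_name;
        toml.push_str(&format!("{dep} = {{ path = \"../{dep}\" }}\n"));
    }
    toml
}

/// Emit the top-level main.rs. `ops` is assumed to be topologically sorted,
/// so every operator's inputs are built before the operator itself.
fn main_source(ops: &[Operator]) -> String {
    let mut src = String::from("fn main() {\n");
    for (i, op) in ops.iter().enumerate() {
        // Pass the outputs of the upstream operators as arguments.
        let args: Vec<String> = op.inputs.iter().map(|j| format!("s{j}")).collect();
        src.push_str(&format!(
            "    let s{i} = {}::build({});\n",
            op.crate_name,
            args.join(", ")
        ));
    }
    src.push_str("}\n");
    src
}

fn main() {
    let ops = vec![
        Operator { crate_name: "op_scan_a".into(), inputs: vec![] },
        Operator { crate_name: "op_scan_b".into(), inputs: vec![] },
        Operator { crate_name: "op_join".into(), inputs: vec![0, 1] },
    ];
    print!("{}", operator_manifest(&ops, 2));
    print!("{}", main_source(&ops));
}
```

Because every crate has the same predictable shape, the generator only needs the graph's edges to produce the manifests; the main crate would additionally need its own Cargo.toml listing every operator crate.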

We still need to figure out how to name these crates. A simple but powerful method is to hash the Rust code each crate contains and use the hash as the crate's name.
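One way to do this, sketched under our own assumptions: hash the generated source with 64-bit FNV-1a and prefix the hex digest with a hypothetical `op_` so the name doesn't start with a digit. FNV-1a is used here only because it is tiny and its output never changes between Rust releases, unlike `std`'s `DefaultHasher`, whose algorithm is explicitly unspecified. The post doesn't say which hash Feldera uses; a cryptographic hash such as SHA-256 would make collisions even less of a concern.

```rust
/// 64-bit FNV-1a. Its output depends only on the input bytes, so it is
/// stable across compiler versions and machines.
fn fnv1a_64(bytes: &[u8]) -> u64 {
    const OFFSET_BASIS: u64 = 0xcbf2_9ce4_8422_2325;
    const PRIME: u64 = 0x0000_0100_0000_01b3;
    let mut hash = OFFSET_BASIS;
    for &b in bytes {
        hash ^= u64::from(b);
        hash = hash.wrapping_mul(PRIME);
    }
    hash
}

/// Derive a crate name from the generated Rust source. Identical source
/// always yields the identical name; the `op_` prefix (a hypothetical choice)
/// keeps the name from starting with a digit.
fn crate_name_for(source: &str) -> String {
    format!("op_{:016x}", fnv1a_64(source.as_bytes()))
}

fn main() {
    let before = crate_name_for("pub fn build() { /* filter */ }");
    let after = crate_name_for("pub fn build() { /* filter, tweaked */ }");
    // Re-hashing unchanged source reproduces the same name.
    assert_eq!(before, crate_name_for("pub fn build() { /* filter */ }"));
    println!("{before}\n{after}");
}
```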

This ensures two things:

a. We have unique crate names.

b. More importantly, rebuilds after small changes to the SQL become incredibly cheap.

Imagine the user tweaks the SQL code just slightly. Most of the operators (and their crates) stay identical, so their hashes don't change and cargo can reuse most of the previously compiled artifacts. Any new code introduced by the change ends up in a new crate (with a different hash).
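The effect can be modeled as plain set arithmetic over crate names. The sketch below compares the names from the previous build with the new ones; the names and `plan_rebuild` are hypothetical, and it is a simplification, since cargo's own fingerprinting makes the real decision and also rebuilds a crate when one of its dependencies changed.

```rust
use std::collections::BTreeSet;

/// Split the crate names of a new build into those whose artifacts can be
/// reused, those that must be compiled, and those no longer referenced.
/// Because names are content hashes, an unchanged operator keeps its name.
fn plan_rebuild<'a>(
    previous: &BTreeSet<&'a str>,
    current: &BTreeSet<&'a str>,
) -> (Vec<&'a str>, Vec<&'a str>, Vec<&'a str>) {
    let reused: Vec<&str> = current.intersection(previous).copied().collect();
    let to_build: Vec<&str> = current.difference(previous).copied().collect();
    let stale: Vec<&str> = previous.difference(current).copied().collect();
    (reused, to_build, stale)
}

fn main() {
    // Hypothetical hash-named crates before and after a small SQL tweak.
    let previous: BTreeSet<&str> = ["op_1111", "op_2222", "op_3333"].into_iter().collect();
    let current: BTreeSet<&str> = ["op_1111", "op_2222", "op_4444"].into_iter().collect();
    let (reused, to_build, stale) = plan_rebuild(&previous, &current);
    println!("reused:   {reused:?}");
    println!("to build: {to_build:?}");
    println!("stale:    {stale:?}");
}
```

Here only op_4444 needs compiling, while op_1111 and op_2222 are reused as they are.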

So how many crates are we talking about for that monster SQL program?

Let’s peek into the compiler directory inside the feldera container:

And then:

That’s right — 1,106 crates!

Sounds excessive? Maybe. But in the end, this is what lets the build run many rustc processes in parallel and keep the whole machine busy.

Are we done?

Unfortunately, not quite. There are still some mysteries here. Given that we now fully utilize 128 threads (64 cores) for pretty much the entire compile time, we can do a back-of-the-envelope calculation for how long it should take: 25 min / 128 ≈ 12 s (or maybe 24 s, since hyper-threads aren't real cores). Yet it takes 170 s to compile everything. Of course, we can't expect a linear speed-up in practice, but being 7x slower than that estimate seems excessive (these are all just parallel rustc invocations that run independently). Similar slowdowns also happen on laptop-grade machines with much less memory and far fewer cores, so the problem isn't limited to very large machines.

Here are some thoughts on what might be happening, but we'd be happy to hear some more opinions on this:

- Contention on hardware resources (the system has more than enough memory, but the processes might contend on caches).

- The file system is a bottleneck. (We doubt it, since running on a RAM-based file system made no difference, but there could be contention on locks in the kernel's file-system code.)

- Compiling each of the 1k crates repeats some steps that are amortized when compiling a single crate. (True, but the slowdown doesn't appear with -j1: individual crates compile much faster as long as they are built one after another.)

- Linking is now the bottleneck because we added 1k crates? We use mold, and the total link time is only around 7 s.

Conclusion

By simply changing how we generate Rust code under the hood, we’ve made Feldera’s compile times scale with your hardware instead of fighting it. What used to take 30–45 minutes now compiles in under 3 minutes, even for complex enterprise-scale SQL.

If you’re already pushing Feldera to its limits: thank you. Your workloads help us make the system better for everyone.
